Beowulf cluster

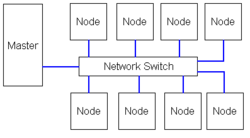

[[Image:Simple Beowulf Cluster Diagram.png|right|thumb|250px|In a Beowulf cluster, the network cables (shown in blue) that connect the eight nodes to the master serve as a [[Computer#How_computers_work:_the_stored_program_architecture|bus]]]] | [[Image:Simple Beowulf Cluster Diagram.png|right|thumb|250px|In a Beowulf cluster, the network cables (shown in blue) that connect the eight nodes to the master serve as a [[Computer#How_computers_work:_the_stored_program_architecture|bus]]]] | ||

Latest revision as of 06:00, 18 July 2024

A Beowulf cluster is a class of supercomputer built from "Commercial Off the Shelf" (COTS) hardware, such as personal computers linked by Ethernet switches so that they act as one machine, together with the Beowulf software libraries, which help implement distributed applications.

The concept of clustering machines together in this way is known as distributed computing.

The Beowulf libraries provide facilities for using a global process ID (shared among the machines in the cluster), methods for remote execution of processes across the cluster, and more. The downside of these libraries is that programs have to be written specifically to run on a Beowulf and compiled with the libraries included. Newer clustering technologies such as Mosix address this limitation.[1]

Beowulf Development

In early 1993, NASA scientists Donald Becker and Thomas Sterling began sketching out the details of what would become a revolutionary way to build a cheap supercomputer: link low-cost desktops together with commodity off-the-shelf (COTS) hardware and combine their performance.[2]

By 1994, under the sponsorship of the "High Performance Computing & Communications for Earth & Space Sciences" (HPCC/ESS)[3] project, the Beowulf Parallel Workstation project at NASA's Goddard Space Flight Center had begun.[4] [5]

Beowulf Implementation

This type of cluster is composed of a 'master' (which coordinates the processing power of the cluster) and usually many 'nodes' (computers that actually perform the calculations). The master is typically a server-class machine with more horsepower (i.e., memory and CPU power) than the individual nodes. The nodes in the cluster don't have to be identical, although they usually are, because this simplifies deployment.
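As a rough illustration of the master/node division of labour described above, the following Python sketch has a "master" function split a job into chunks, hand one chunk to each worker, and combine the partial results. It is a single-machine analogy only: worker threads stand in for cluster nodes, and the names `node_task`, `split`, and `master` are hypothetical and are not part of the Beowulf libraries.

```python
from concurrent.futures import ThreadPoolExecutor


def node_task(chunk):
    """Work done by one 'node': sum of squares over its share of the data."""
    return sum(x * x for x in chunk)


def split(data, n_nodes):
    """Master-side helper: deal the data out into n_nodes interleaved chunks."""
    return [data[i::n_nodes] for i in range(n_nodes)]


def master(data, n_nodes=8):
    """Master: give one chunk to each worker and combine the partial results.

    Assumption: threads on one machine stand in for the nodes; a real
    cluster would ship each chunk over the network to a separate computer.
    """
    chunks = split(data, n_nodes)
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(node_task, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(master(list(range(1000))))
```

The default of eight workers simply mirrors the eight nodes in the diagram. The point of the sketch is the shape of the program: the work has to be divided and recombined explicitly, which is why software must be written with the cluster in mind.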

The Beowulf nodes usually run Linux,[6] but this is not required: both Mac OS X and FreeBSD clusters have been created.[7][8]

Popularity in High-Performance Computing

Today Beowulf systems are deployed worldwide both as "cheap supercomputers" and as more traditional high-performance projects, chiefly in support of number crunching and scientific computing.

More than 50 percent of the machines on the Top 500 list of supercomputers[9] are clusters of this sort.[2]

External links

The Linux Beowulf HOWTO, from the Linux Documentation Project

References

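- [1] "From Word Processors to Super Computers: Donald Becker Speaks about Beowulf at NYLUG". http://ssadler.phy.bnl.gov/adler/DB/DonaldBecker.html (retrieved 11 April 2007).

- [2] "The inside story of the Beowulf saga". http://www.gcn.com/print/24_8/35499-1.html (retrieved 11 April 2007).

- [3] "High Performance Computing & Communications for Earth & Space Sciences homepage". http://www.lcp.nrl.navy.mil/hpcc-ess/ (retrieved 11 April 2007).

- [4] "Donald Becker's Bio at Beowulf.org". http://www.beowulf.org/community/bio.html (retrieved 11 April 2007).

- [5] "Beowulf History from beowulf.org". http://www.beowulf.org/overview/history.html (retrieved 11 April 2007).

- [6] "Beowulf Project Overview". http://www.beowulf.org/overview/index.html (retrieved 8 April 2007).

- [7] "Mac OS X Beowulf Cluster Deployment Notes". http://www.stat.ucla.edu/computing/clusters/deployment.php (retrieved 8 April 2007).

- [8] "A small Beowulf Cluster running FreeBSD". http://docs.huihoo.com/hpc-cluster/mini-wulf/ (retrieved 8 April 2007).

- [9] "TOP500 Supercomputer Sites". http://top500.org (retrieved 11 April 2007).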