NoSQL: Difference between revisions

imported>Lj Huang No edit summary |

mNo edit summary |

||

| (31 intermediate revisions by 8 users not shown) | |||

| Line 1: | Line 1: | ||

{{subpages}} | {{subpages}} | ||

{{TOC|right}} | {{TOC|right}} | ||

'''NoSQL''' refers to a number of non-relational distributed database architectures. NoSQL architectures usually store data as key-value pairs, rather than supporting relations. Some systems eliminate the guarantee of consistency (instead promising eventual consistency) in order to increase scalability. The distributed nature of NoSQL architectures makes such data stores highly scalable and fault-tolerant. | '''NoSQL''' refers to a number of non-relational distributed database architectures. The term has generated much interest in the database world, although developer Emil Eifrem suggested that it was hyped and not clearly defined.<ref>{{citation | ||

| first = Emil | |||

| last= Eifrem | |||

| url = http://www.infoq.com/presentations/Neo4j-NOSQL-and-Graph-Databases;jsessionid=ECD76E943F385EEAA354ACE4C328B150 | |||

| title = Neo4j: NOSQL and the Benefits of Graph Databases | |||

| publisher = QCon conference | |||

| date = 2010-07-14 | |||

}}</ref> The NoSQL abbreviation might be best understood not as excluding SQL but as meaning ''Not only SQL'', according to Eifrem.<ref>{{citation | |||

| first = Emil | |||

| last= Eifrem | |||

| url = http://www.infoq.com/presentations/Neo4j-NOSQL-and-Graph-Databases;jsessionid=ECD76E943F385EEAA354ACE4C328B150 | |||

| title = Neo4j: NOSQL and the Benefits of Graph Databases | |||

| publisher = QCon conference | |||

| date = 2010-07-14 | |||

}}</ref> NoSQL architectures usually store data as key-value pairs, rather than supporting relations. Some systems eliminate the guarantee of consistency (instead promising eventual consistency) in order to increase scalability. The distributed nature of NoSQL architectures makes such data stores highly scalable and fault-tolerant. | |||

==History== | ==History== | ||

The word "NoSQL" ( | ===Beginnings=== | ||

Databases were a chief component of the earliest computers. The earliest databases, from the 1960s and 1970s when mainframe computers were prominent, had records with fixed individual fields. For example, a particular database might have had five fixed fields, so that each record had slots for a person's first name, last name, phone number, city, and employment status code. Accordingly, record number 10013 might have held the values "Bill", "Robinson", 5551212, "Dusseldorf", and "hired". To ''query'' the database, a program would read all the records sequentially and check whether each met a specified condition, such as pulling every record of a person who lived in Dusseldorf. | |||
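A sequential query over fixed-field records can be sketched in a few lines of Python. This is only an illustration: the <code>Record</code> type, the <code>scan</code> function, and every record other than the "Bill Robinson" example are hypothetical names and data introduced here.

```python
from typing import Callable, List, NamedTuple


class Record(NamedTuple):
    """One fixed-format record with the five fields described in the text."""
    first_name: str
    last_name: str
    phone: int
    city: str
    status: str


def scan(records: List[Record], condition: Callable[[Record], bool]) -> List[Record]:
    """Read every record in order and keep those that meet the condition."""
    return [record for record in records if condition(record)]


records = [
    Record("Bill", "Robinson", 5551212, "Dusseldorf", "hired"),
    Record("Ana", "Keller", 5550101, "Hamburg", "hired"),      # hypothetical record
    Record("Jon", "Meyer", 5550199, "Dusseldorf", "retired"),  # hypothetical record
]

# The query visits all records, so its cost grows with the size of the file.
in_dusseldorf = scan(records, lambda record: record.city == "Dusseldorf")
```

Because every record is visited, the time for such a query grows in step with the number of records, which is one reason later designs added indexes and other access structures.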

===Relational databases=== | |||

As hardware and storage prices fell while data grew exponentially, new techniques were developed to hold data on dispersed computers rather than on one large centralized computer. Data could be kept on multiple computers over an internal network or even on computers throughout the World Wide Web. In a database management system, a schema defines such things as tables, fields, relationships, views, indexes, functions, packages, and procedures; it is somewhat similar to a train network with different types of crews and machines tasked with maintaining parts of the rail network. | |||

It was possible to have a single large relational database running across lots of different computers. The new structures built upon the old ideas of column and row but added new dimensions. For example, every relational database has an object called a "table" which has columns or fields (with preset parameters) and rows containing the actual data.<ref>{{citation | |||

| url = http://www.sql-tutorial.net/ | |||

| title = SQL Tutorial - Learn SQL | |||

| journal = | |||

| date = 2010-09-15 | |||

}}</ref> There were huge advantages to the new structured data arrangements. They increased availability and reliability and allowed the database to expand. The database could be tailored to reflect a firm's organizational setup or client needs. A fire in a central building wouldn't shut down the database, since other computers had copies of the valuable information. Different servers could trade off tasks, so that if one server became extremely busy, other servers could pick up the demand. Some so-called ''nodes'' could go offline and the system would continue to function because of "transparent replication" and "fault tolerance". Clients could access the database through a protocol rather than connecting directly to the individual nodes. The arrangement was cheaper overall and could operate continuously; in contrast, a single large centralized computer would have to be shut down for maintenance, preventing the database from working during the down times. It was also possible to break off one server or module to upgrade or expand it. But it wasn't all rosy, either. The distributed structure was more complex and often required extra work to keep the different computers synchronized with each other. Security considerations were a factor too: with valuable data spread out, there were multiple places where a hacker could break into the system or steal data in transit. The whole system had to maintain integrity, including keeping standards and adding new software as needed. And how would duplicated data on different servers be kept ''concurrent'', that is, up to date? | |||

{{Image|Linked list data format.jpg|right|400px|In a linked list data format, each record has one piece of information; the second piece points to the next record in a sequential format.}} | |||

New types of data structures were being developed. In a [[linked list (computing)|linked list]], each record would have two placeholders for information; the first would hold the specific data, and the second would be a [[link (computer)|link]] or reference which pointed to the next record. So a whole series of records could be linked sequentially. It was possible to insert or delete a record provided that one adjusted the corresponding links and pointers on the records immediately before and immediately after the altered record. Usually linked list data structures had an extra record at the beginning and end of the list called a "sentinel", which held no information and was used mostly to mark a list's beginning or end. It meant that software programs could process each real record knowing that there was always a record before and after it; this spared programs from handling the ends of the list as special cases. | |||
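A linked list with sentinel records might look like the following Python sketch. It is an assumption-laden illustration rather than any particular system's design: the class and method names are hypothetical, and the sketch uses a doubly linked variant (each record also points back to its predecessor) so that both neighbours of a record are easy to reach.

```python
class ListNode:
    """One record: a data slot plus links to its neighbours."""

    def __init__(self, data=None):
        self.data = data
        self.prev = None
        self.next = None


class LinkedList:
    def __init__(self):
        # Two empty sentinel records mark the beginning and end of the list.
        self.head = ListNode()
        self.tail = ListNode()
        self.head.next = self.tail
        self.tail.prev = self.head

    def insert_after(self, node, data):
        """Insert a new record after `node` (any record except the tail sentinel)."""
        new = ListNode(data)
        new.prev, new.next = node, node.next
        node.next.prev = new  # adjust the link on the record after
        node.next = new       # adjust the link on the record before
        return new

    def remove(self, node):
        """Unlink a real record; sentinels guarantee both neighbours exist."""
        node.prev.next = node.next
        node.next.prev = node.prev

    def __iter__(self):
        current = self.head.next
        while current is not self.tail:
            yield current.data
            current = current.next


records = LinkedList()
a = records.insert_after(records.head, "a")
c = records.insert_after(a, "c")
b = records.insert_after(a, "b")  # the list now holds a, b, c
records.remove(c)                 # the list now holds a, b
```

Because the sentinels always exist, <code>insert_after</code> and <code>remove</code> never need to check whether a neighbouring record is missing.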

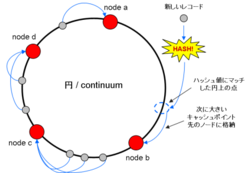

{{Image|B TREE DIAGRAM.jpg|right|400px|A B-tree is a tree data structure that keeps data sorted and allows searches, sequential access, insertions, and deletions in logarithmic time. B-trees are highly efficient and are used by many operating systems and languages such as Novell and MUMPS. They provide rapid access to stored data using textual keys. The idea is to enable the exact piece of data to be accessed in few steps.}} | |||

In a second type of data structure called a [[B-Tree (data structure)|B-tree]], data would be organized like a tree with branches, with records branching out from other records in a hierarchical format.<ref>{{citation | |||

| url = http://www.virtualmachinery.com/btreeguide.htm | |||

| title = The Theory of BTrees | |||

| publisher = Virtual Machinery | |||

| journal = BTree Guide | |||

| date = 2010-09-17 | |||

}}</ref> There were advantages to this arrangement as well. And some databases went from using straightforward row and column formats to using so-called [[hash tables (computing)|hash tables]]; when the table is spread across many computers, it is called a "distributed hash table", sometimes abbreviated as DHT. A ''hash table'' is a data structure that uses a ''hash function'' to map identifying values known as ''keys'' (such as a person's name) to their associated ''values'' (such as a telephone number), which is why such structures are sometimes called "key-value" data structures. The hash function transforms the key into the index (called the hash) of an array element (called a slot or bucket) where the corresponding value is to be sought. Two different keys can hash to the same slot; such ''hash collisions'' must be handled explicitly, for example by keeping a short list of entries in each slot. | |||
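The mapping from key to slot, and one common way of dealing with collisions, can be shown with a minimal Python sketch. The <code>HashTable</code> class is hypothetical and uses separate chaining (a small list per slot), which is only one of several collision strategies; the names and phone numbers are made up for the example.

```python
class HashTable:
    """A small hash table that resolves collisions by chaining."""

    def __init__(self, slots=8):
        # Each slot (bucket) holds a list of (key, value) pairs.
        self.buckets = [[] for _ in range(slots)]

    def _index(self, key):
        # The hash function turns the key into an array index.
        return hash(key) % len(self.buckets)

    def put(self, key, value):
        bucket = self.buckets[self._index(key)]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)  # overwrite an existing key
                return
        bucket.append((key, value))       # new key, possibly a collision

    def get(self, key, default=None):
        for existing_key, value in self.buckets[self._index(key)]:
            if existing_key == key:
                return value
        return default


# With only two slots, three keys are guaranteed to collide somewhere.
phone_book = HashTable(slots=2)
phone_book.put("Bill Robinson", "5551212")
phone_book.put("Ana Keller", "5550101")  # hypothetical entry
phone_book.put("Jon Meyer", "5550199")   # hypothetical entry
```

Even when several keys share a slot, <code>get</code> still finds the right value, because it compares keys within the bucket.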

Programmers learned techniques to keep far-flung computers synchronized. One was [[Replication (computing)|replication]], in which specialized software hunted for changes (when the software found changed data on one computer, it would alert the other computers). Another was [[Duplication (computing)|duplication]], in which one server would be dubbed the ''master server'' and would hold the authoritative version of the data. At night or during off-hours, it would be updated by satellite servers, and then the master database would copy itself to all the satellites (under one arrangement; many alternatives were possible). This way, all the computers would end up with the same data as the master. | |||

===Structured Query Language=== | |||

But with information scattered across the internet, how could questions be posed to the data? In 1970, Dr. E. F. Codd published a paper entitled ''A Relational Model of Data for Large Shared Data Banks''. His IBM colleagues Donald Chamberlin and Raymond Boyce worked on a way to specify queries as ''relational expressions''. As a result, a new way of querying databases known as ''Structured Query Language'' or SQL emerged, which allowed users to search information across linked databases, and this proved to be a much more powerful and useful way of managing data. It didn't require database managers to rebuild databases as new information came along; rather, they could link databases dynamically. SQL became the dominant language for what were called [[relational database systems]], sometimes abbreviated as '''RDBMS''' (relational database management systems), and various software products were developed to manage such database systems, such as MySQL, Microsoft SQL Server, PostgreSQL, and Oracle RDBMS. | |||
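To give a flavour of how SQL links data at query time, the following sketch uses Python's built-in <code>sqlite3</code> module with an in-memory database. The table names, column names, and sample rows are assumptions made for this illustration only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database

# Two tables linked by person.id = phone.person_id (hypothetical schema).
conn.execute(
    "CREATE TABLE person (id INTEGER PRIMARY KEY, first_name TEXT, "
    "last_name TEXT, city TEXT)"
)
conn.execute(
    "CREATE TABLE phone (person_id INTEGER REFERENCES person(id), number TEXT)"
)
conn.executemany(
    "INSERT INTO person VALUES (?, ?, ?, ?)",
    [(10013, "Bill", "Robinson", "Dusseldorf"), (10014, "Ana", "Keller", "Hamburg")],
)
conn.executemany(
    "INSERT INTO phone VALUES (?, ?)",
    [(10013, "5551212"), (10014, "5550101")],
)

# The query states WHAT is wanted; the database decides HOW to find it.
rows = conn.execute(
    """
    SELECT p.first_name, p.last_name, ph.number
    FROM person AS p
    JOIN phone AS ph ON ph.person_id = p.id
    WHERE p.city = ?
    ORDER BY p.last_name
    """,
    ("Dusseldorf",),
).fetchall()
```

The join is expressed in the query itself, so the two tables can be combined on demand without rebuilding either of them.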

New criteria were used to assess how well these relational databases were working. One set of rules to classify these criteria caught on, and was dubbed [[ACID properties]] <!--which was an abbreviation for the following terms: | |||

* '''Atomicity''' (A) means all database modifications follow an "all or nothing" rule. If one part of the transaction fails, the whole transaction fails and the database is unchanged. This is particularly crucial with matters such as banking transactions; one wouldn't want a withdrawal to happen to one account while a second account wasn't credited. Both parts would be part of a single transaction, and if one part of the transaction failed, then the whole thing would fail and the database would be back to square one (without any errors). | |||

* '''Consistency''' (C) means the database remains in a consistent state, that is, that any transaction will take the database from one consistent state to another consistent state. If there's a screw-up or failure, the database can be rolled back to the earlier consistent state and therefore still be intact and operable. | |||

* '''Isolation''' (I) means that when a transaction is happening, other operations can't tamper with it and possibly pollute the transaction. It's like a hospital in which only one patient is operated on at a time and only one surgical procedure is done; a second surgery team is not allowed to perform a face lift while the primary surgery team is removing a gall bladder, for example. Rather, the first transaction must be isolated, and completed, before any further transactions will be allowed. | |||

* '''Durability''' (D) means that changes to the database ''stick'' and are permanent. If there's a system failure such as a server crashing or a fire in a building, the transaction will not be lost, because there usually is something called a ''transaction log'' to help administrators or the program recover the data.--> | |||
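The "all or nothing" behaviour that the ACID properties require of a transaction can be demonstrated with Python's built-in <code>sqlite3</code> module. This is a minimal sketch: the <code>account</code> table, the <code>transfer</code> function, and the balances are hypothetical, and the second update is arranged to fail so that the rollback is visible.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# The CHECK constraint makes an overdraft fail, which triggers the rollback.
conn.execute(
    "CREATE TABLE account (name TEXT PRIMARY KEY, "
    "balance INTEGER CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO account VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move money between accounts as one transaction; return True on success."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute(
                "UPDATE account SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE account SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False


ok = transfer(conn, "alice", "bob", 30)       # both updates are applied
failed = transfer(conn, "alice", "bob", 500)  # credit is undone with the debit
balances = dict(conn.execute("SELECT name, balance FROM account"))
```

In the failed transfer the credit to the second account has already been executed when the debit violates the constraint, yet after the rollback neither change remains.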

By the late 1990s, however, problems were beginning to emerge, particularly when databases became vast, with hundreds of millions of records, and programmers were searching for better ways to get at data efficiently. One response was the family of "NoSQL" data stores, conceived as an alternative to SQL-based relational databases.<ref> The end of SQL and relational databases? (part 1 of 3), David Intersimone, February 2, 2010, [http://blogs.computerworld.com/15510/the_end_of_sql_and_relational_databases_part_1_of_3 Computerworld]</ref> | |||

Datasets were growing exponentially. Sometimes users needed to know only pieces of the data, or to query some parts of databases but not others. How could questions be posed to these data sets efficiently? Programmers who had been used to the idea of "one size fits all" found that traditional solutions were becoming cumbersome. | |||

The word "NoSQL" ('''N'''ot '''O'''nly '''SQL''') was first used by Carlo Strozzi in 1998 referring to a file-based database he was developing, which is actually a [[relational database]] without a SQL interface. | |||

<blockquote> | |||

Only as the internet gave way to the masses and large scale concurrency and data generation ushered in a new era has the relational way of doing data management truly begun to break down, opening the door to alternatives.<small>Michael Stonebraker, November 2009</small><ref name=Stonebraker> The "NoSQL" Discussion has Nothing to Do With SQL, Michael Stonebraker, November 4, 2009, [http://cacm.acm.org/blogs/blog-cacm/50678-the-nosql-discussion-has-nothing-to-do-with-sql/fulltext Communications of the ACM]</ref> | |||

</blockquote> | |||

In contrast with the rather rigid ACID criteria for relational databases, a new set of criteria was being touted. It was called [[BASE (computing)|BASE]], an acronym for "basically available, soft state, eventual consistency". Trade-offs were being made. It was no longer seen as essential for every transaction to be consistent immediately, as long as the data became consistent eventually, that is, in sufficient time to be useful. | |||
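Eventual consistency can be illustrated with a toy model of two replicas that exchange updates from time to time. Everything in this Python sketch is an assumption made for illustration: the <code>Replica</code> class, the <code>sync</code> function, and the rule that the write with the highest version number wins are not taken from any particular NoSQL product.

```python
class Replica:
    """One copy of the data; each key stores a (version, value) pair."""

    def __init__(self):
        self.data = {}

    def write(self, key, value, version):
        current = self.data.get(key)
        # Keep only the newest version of each key.
        if current is None or version > current[0]:
            self.data[key] = (version, value)

    def read(self, key):
        entry = self.data.get(key)
        return entry[1] if entry else None


def sync(a, b):
    """Exchange updates in both directions so the replicas converge."""
    for source, target in ((a, b), (b, a)):
        for key, (version, value) in list(source.data.items()):
            target.write(key, value, version)


r1, r2 = Replica(), Replica()
r1.write("copies_in_stock", 1, version=1)
sync(r1, r2)

r1.write("copies_in_stock", 0, version=2)
stale = r2.read("copies_in_stock")  # r2 has not yet heard about the sale
sync(r1, r2)
fresh = r2.read("copies_in_stock")  # after syncing, both replicas agree
```

Between the write and the next synchronization, the second replica answers with out-of-date data; this window of staleness is the price paid for staying available.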

Firms such as [[Google]] were pioneering ways of handling extremely large datasets. Google built a proprietary system called ''BigTable'', started in 2004, which it used for many Google applications. It was a fast, large-scale database management system: not a traditional row and column database, but something designed to handle petabytes of information running across hundreds or thousands of machines. New machines could be added without much fuss. Each table had multiple dimensions including a field for the timestamp, which allowed features such as "versioning" and "garbage collection". Tables could be split into smaller entities called "tablets", and when the data in a tablet grew too large, it could be compressed using specialized algorithms. | |||
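The role of the timestamp dimension can be sketched as follows. This Python example is not BigTable's interface; the <code>VersionedTable</code> class, its methods, and the "keep the newest N versions" policy are hypothetical stand-ins for the ideas of versioning and garbage collection.

```python
class VersionedTable:
    """Cells addressed by (row, column); each cell keeps timestamped versions."""

    def __init__(self, max_versions=2):
        self.max_versions = max_versions
        self.cells = {}  # (row, column) -> list of (timestamp, value), newest first

    def put(self, row, column, timestamp, value):
        versions = self.cells.setdefault((row, column), [])
        versions.append((timestamp, value))
        versions.sort(key=lambda pair: pair[0], reverse=True)
        # "Garbage collection": discard all but the newest versions.
        del versions[self.max_versions:]

    def get(self, row, column, at=None):
        """Return the newest value, or the newest one written at or before `at`."""
        for timestamp, value in self.cells.get((row, column), []):
            if at is None or timestamp <= at:
                return value
        return None


table = VersionedTable(max_versions=2)
table.put("page1", "title", 1, "first draft")
table.put("page1", "title", 2, "second draft")
table.put("page1", "title", 3, "final")

latest = table.get("page1", "title")          # newest version
earlier = table.get("page1", "title", at=2)   # the value as of timestamp 2
collected = table.get("page1", "title", at=1) # oldest version was discarded
```

Reading "as of" an earlier timestamp works only while that version is still retained; once it has been garbage-collected the lookup returns nothing.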

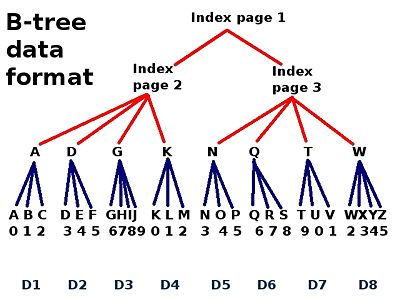

{{Image|Matrix Example Of NoSQL.jpg|right|400px|Emil Eifrem referred to characters from the movie ''The Matrix'' in a talk in July 2010 to demonstrate how data engineers are coping with complexity while trying to maintain speed and accuracy. Each character is a ''node'' with associated data such as "name", "age", and an ID. The database also records ''relations'' such as who knows who.}} | |||

NoSQL approaches could manage data that was distributed across multiple sites, sometimes including offline data stored on large disk drives. This made for a more ''scalable'' approach.<ref name=Stonebraker/> | |||

Some notable events in the evolution of NoSQL include: MultiValue (also known as PICK) databases were developed in 1965; BerkeleyDB was created in 1996; Mnesia was developed by Ericsson as a soft real-time database for use in telecom (it does not use SQL as its query language); CouchDB, a document database, was started in 2005 and moved to the Apache Foundation in 2008; Google BigTable was started in 2004; Tokyo Cabinet, a successor to QDBM by Mikio Hirabayashi, was started in 2006; the research paper on Amazon Dynamo was released in 2007; the document database MongoDB was started in 2007 as part of an open source cloud computing stack; Facebook open-sourced the Cassandra project in 2008; Redis, a persistent key-value store, was started in 2009; Riak, another Dynamo-inspired database, was started in 2009; HBase is a BigTable clone for the Hadoop project, while Hypertable is another BigTable-type database, also from 2009. In 2009, Eric Evans re-used the term "NoSQL" to describe the current surge in non-relational databases.<ref>{{citation | first = Knut | last = Haugen | url = http://blog.knuthaugen.no/2010/03/a-brief-history-of-nosql.html | title = A Brief History of NoSQL}}</ref> NoSQL databases tried to improve efficiency by removing the overhead and memory footprint of relational databases, thus speeding up searches. Other NoSQL approaches were more closely linked with programming languages and tied to web technologies, thus giving users new ways of querying data.<ref> The end of SQL and relational databases? (part 1 of 3), David Intersimone, February 2, 2010, [http://blogs.computerworld.com/15510/the_end_of_sql_and_relational_databases_part_1_of_3 Computerworld]</ref> | |||

===Struggling to manage huge databases=== | |||

Software engineer Cédric Beust suggested the term NoSQL wasn't meant in opposition to SQL but rather was "outside" or beyond the traditional understanding of SQL: | |||

<blockquote> | |||

Google, Amazon, Facebook, and DARPA all recognized that when you scale systems large enough, you can never put enough iron in one place to get the job done (and you wouldn’t want to, to prevent a single point of failure). Once you accept that you have a distributed system, you need to give up consistency or availability, which the fundamental transactionality of traditional RDBMSs (relational database management systems) cannot abide. Based on the realization that something fundamentally different needed to be built, a lot of Very Smart People tackled the problem in a variety of different ways, making different trades along the way. Eventually, we all started getting together and trading ideas, and we realized that we needed some moniker to call all of these different databases that were not the traditional relational databases. The NoSQL name was coined more along the lines of “anything outside of the SQL part of the Venn diagram” rather than “opposed to SQL”. The NoSQL databases are a pragmatic response to growing scale of databases and the falling prices of commodity hardware. It’s not a noble counterculture movement (although it does attract the sort that have a great deal of mental flexibility), it’s just a way to get business done cheaper. ---<small>Cédric Beust</small><ref> NoSQL explained correctly (finally), Cédric Beust, February 25, 2010, [http://beust.com/weblog/2010/02/25/nosql-explained-correctly-finally/ Otaku-Cedric's Blog]</ref> | |||

</blockquote> | |||

But it is not clear whether the NoSQL technologies will replace traditional SQL relational databases or whether these are new tools to take SQL in new directions. Analyst Roberto V. Zicari, writing in the ODBMS Industry Watch journal, sees the database industry's important focus as finding "the right tool for the job", and believes this "mantra" for the software industry is an "important change in attitude."<ref> Are object databases “NoSQL” technologies?, Roberto V. Zicari, November 24th, 2009, [http://www.odbms.org/blog/2009/11/are-object-databases-nosql-technologies ODBMS Industry Watch]</ref> | |||

Proponents of NoSQL approaches complain that relational databases are slow, expensive, and heavily "taxing" of computer resources. Springsource engineer Jon Travis quipped "relational databases give you too much. They force you to twist your object data to fit a relational database management system."<ref> No to SQL? Anti-database movement gains steam, Eric Lai, July 1, 2009, [http://www.computerworld.com/s/article/9135086/No_to_SQL_Anti_database_movement_gains_steam_ Computerworld]</ref> Travis sees NoSQL champions particularly among web and Java developers who have had to grow powerful data search capability without existing data storage solutions. They learned from firms such as Amazon and Google how to efficiently process large swaths of information, up to terabytes or even petabytes.<ref> No to SQL? Anti-database movement gains steam, Eric Lai, July 1, 2009, [http://www.computerworld.com/s/article/9135086/No_to_SQL_Anti_database_movement_gains_steam_ Computerworld]</ref> Firms like Cloudant write software designed to work when data is generated in a distributed way, possibly by sensor networks, web servers, or mobile storage services; the idea is to help small companies manage "big data", according to Alexia Tsotsis of Tech Crunch.<ref> YC-Funded Cloudant Launches Its NoSQL Cloud Database Platform, Alexia Tsotsis, Sep 3, 2010, [http://techcrunch.com/2010/09/03/cloudant/?utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+Techcrunch+%28TechCrunch%29 Tech Crunch]</ref> | |||

Analyst Julian Browne explained how new problems emerge when databases grow large and data exist across different servers in numerous places around the web. Suppose, for example, that two users are interested in buying a particular book online but only one copy is available, and that information about this one book is held on several different computers. What's paramount is that the computers in this large distributed database coordinate with each other. If one person buys the book with a mouse click, then the second person should see (almost instantly) that the book is no longer available. As soon as the book is purchased, one computer must tell all the other computers that the book has been sold. The idea is to prevent situations in which two people succeed in ordering the same book.<ref> Brewer's CAP Theorem, Julian Browne, January 11, 2009, [http://www.julianbrowne.com/article/viewer/brewers-cap-theorem Brewer's Cap Theorem article]</ref> | |||

Browne commented on a conjecture posed by computer scientist Eric Brewer about potential problems with large distributed databases. Brewer identified three desirable properties of such databases: | |||

* a database is '''consistent''' if it operates fully or not at all, so that every user sees the same data. In the book-buying example, if the database is consistent then only one person will be able to buy the book. | |||

* a database is '''available''' if it keeps responding to requests, that is, there's no "time out" or "not working" message. | |||

* '''partition tolerance''' means the system keeps working when temporary breaks in communication, such as a chopped cable, split the network into so-called ''partitions''. The task is to keep the computers synchronized with each other so they're all working from the same data. | |||

Brewer's conjecture held that it was impossible for a large distributed database to guarantee consistency, availability, and partition tolerance all at once; at most two of the three could be had. MIT scientists Seth Gilbert and Nancy Lynch formally proved the conjecture in their paper ''Brewer's Conjecture and the Feasibility of Consistent, Available, Partition-Tolerant Web Services''.<ref> Brewer's CAP Theorem, Julian Browne, January 11, 2009, [http://www.julianbrowne.com/article/viewer/brewers-cap-theorem Brewer's Cap Theorem article]</ref> | |||
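The trade-off can be made concrete with a toy simulation of the book-buying example. The following Python sketch is a deliberately simplified model, not a description of any real system: the <code>BookStore</code> class, its two modes ("CP" favours consistency, "AP" favours availability), and the way a partition is switched on are all assumptions made for illustration.

```python
class StoreNode:
    """One server holding its own copy of the stock count."""

    def __init__(self, copies):
        self.copies = copies


class BookStore:
    def __init__(self, mode):
        self.mode = mode  # "CP" or "AP" (hypothetical labels for this sketch)
        self.nodes = [StoreNode(1), StoreNode(1)]  # one book, known to two servers
        self.partitioned = False

    def buy(self, node_index):
        node = self.nodes[node_index]
        if self.partitioned and self.mode == "CP":
            # Consistency first: refuse to sell when servers cannot coordinate.
            return "unavailable"
        if node.copies == 0:
            return "sold out"
        node.copies -= 1
        if not self.partitioned:
            # Healthy network: tell every other server about the sale.
            for other in self.nodes:
                other.copies = node.copies
        return "sold"


healthy = BookStore("CP")
healthy_results = [healthy.buy(0), healthy.buy(1)]

cp = BookStore("CP")
cp.partitioned = True
cp_results = [cp.buy(0), cp.buy(1)]  # consistent, but not available

ap = BookStore("AP")
ap.partitioned = True
ap_results = [ap.buy(0), ap.buy(1)]  # available, but the one book is sold twice
```

During the partition the consistency-first store turns both customers away, while the availability-first store serves both and oversells the single copy; neither can offer all three properties at once.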

Gilbert and Lynch explained: | |||

<blockquote> | |||

Interactions with web services are expected to behave in a transactional manner: operations commit or fail in their entirety (atomic), transactions never observe or result in inconsistent data (consistent), uncommitted transactions are isolated from each other (isolated), and once a transaction is committed it is permanent (durable). It is clearly important, for example, that billing information and commercial transaction records be handled with this type of strong consistency.---<small>Gilbert and Lynch</small><ref> Brewer's CAP Theorem, Julian Browne, click on the term "formally proved", January 11, 2009, [http://www.julianbrowne.com/article/viewer/brewers-cap-theorem Brewer's Cap Theorem article]</ref> | |||

</blockquote> | |||

{{Image|Data size versus data complexity.jpg|right|400px|In the NoSQL world of huge databases, trade-offs are inevitable, according to Eifrem. Key-value stores are better at handling extra-large datasets, while graph databases are better at handling complexity but are the "most challenging" to get to "scale to size".}} | |||

Relational databases spread over many servers have difficulty achieving all three objectives (consistency, availability, and partition tolerance), so trade-offs are involved; for example, one trade-off is to substitute "eventual consistency" (the data becomes consistent after a delay, which is often good enough) for immediate "consistency". How these trade-offs are handled is typically the province of NoSQL. | |||

Database developer Emil Eifrem suggested in July 2010 that there were four trends in NoSQL: (1) exponential growth in data ("This year more new information will be generated than in all the previous years combined"); (2) greater connectedness, with more (and more sophisticated) hyperlinks; (3) more information built around each node, which Eifrem terms ''semi-structure'' (unlike the 1970s, when a worker had one title, today workers have seven to ten titles, and data must be sophisticated enough to reflect this); and (4) a movement away from different applications sharing one data set, a trend he terms ''architecture''.<ref>{{citation | |||

| first = Emil | |||

| last= Eifrem | |||

| url = http://www.infoq.com/presentations/Neo4j-NOSQL-and-Graph-Databases;jsessionid=ECD76E943F385EEAA354ACE4C328B150 | |||

| title = Neo4j: NOSQL and the Benefits of Graph Databases | |||

| publisher = QCon conference | |||

| date = 2010-07-14 | |||

}}</ref> | |||

==NoSQL vs. RDBMS== | ==NoSQL vs. RDBMS== | ||

| Line 13: | Line 122: | ||

| title = Will NoSQL Databases Live Up to Their Promise? | | title = Will NoSQL Databases Live Up to Their Promise? | ||

| journal = Computer}}</ref> | | journal = Computer}}</ref> | ||

* '''Document stores''' have a database consisting of a collection of (key, value) pairs along with a "payload".<ref> The "NoSQL" Discussion has Nothing to Do With SQL, Michael Stonebraker, November 4, 2009, [http://cacm.acm.org/blogs/blog-cacm/50678-the-nosql-discussion-has-nothing-to-do-with-sql/fulltext Communications of the ACM]</ref> | |||

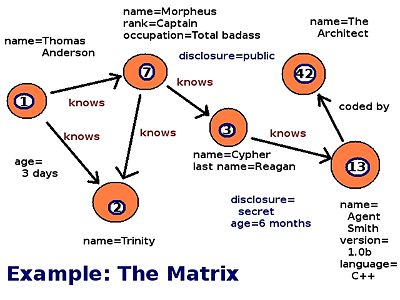

* '''Key-value stores''' have records which consist of a pair including a '''key''' and a '''payload''', which are located using distributed hash tables (or DHTs). Examples include Memcachedb and Dynamo.<ref> The "NoSQL" Discussion has Nothing to Do With SQL, Michael Stonebraker, November 4, 2009, [http://cacm.acm.org/blogs/blog-cacm/50678-the-nosql-discussion-has-nothing-to-do-with-sql/fulltext Communications of the ACM]</ref> | |||

With these arrangements, a lookup yields one record at a time rather than the result set of a typical SQL query. The NoSQL approach means that databases can scale both vertically and horizontally across several servers. In a traditional SQL approach, data is typically held on one server; if a second server is used, then the problem of how to synchronize data between the two servers must be dealt with. NoSQL databases, however, work more easily across a so-called ''cloud of servers'', although they're somewhat limited to simpler requests for data. While a traditional relational database can be conceived as data organized in tables with rows (representing records) and columns (representing fields), NoSQL databases can be organized into objects, key–value pairs or tuples. A key benefit claimed for NoSQL is a closer fit between object-oriented programming and the database. Computerworld analyst David Intersimone believes, however, that relational databases will continue to be dominant in the foreseeable future, but concedes that NoSQL technologies may play a greater role.<ref> The end of SQL and relational databases? (part 1 of 3), David Intersimone, February 2, 2010, [http://blogs.computerworld.com/15510/the_end_of_sql_and_relational_databases_part_1_of_3 Computerworld]</ref> | |||

==Disadvantages of NoSQL== | ==Disadvantages of NoSQL== | ||

NoSQL databases are fast for simple tasks; however, they may be time-consuming and difficult for more complex tasks because, without SQL, they require manual query programming. | NoSQL databases are fast for simple tasks; however, they may be time-consuming and difficult for more complex tasks because, without SQL, they require manual query programming. | ||

NoSQL databases are not as reliable as relational databases since they don't natively support ACID. If users want to apply ACID constraints to a data set, they need additional programming. Consistency is another issue incurred by not supporting ACID. If users are not familiar with the technology, they might not be able to determine that the approach is better for their purposes. In addition, many open source NoSQL applications don't have customer support or management tools yet. | NoSQL databases are not as reliable as relational databases since they don't natively support [[ACID properties]]. If users want to apply ACID constraints to a data set, they need additional programming. Consistency is another issue incurred by not supporting ACID. If users are not familiar with the technology, they might not be able to determine that the approach is better for their purposes. In addition, many open source NoSQL applications don't have customer support or management tools yet. | ||

Analyst Joab Jackson of IDG News suggested that NoSQL querying can be done, but sometimes users have to think differently about how to write the query. Accordingly, there is a learning curve to using the new approach. NoSQL approaches using CouchDB allow users to build a web application without a so-called "middle tier", eliminating the Java stack.<ref> CouchDB NoSQL Database Ready for Production Use, Joab Jackson of IDG News Service, July 14, 2010, [http://www.nytimes.com/external/idg/2010/07/14/14idg-couchdb-nosql-database-ready-for-production-use-58614.html?src=tp The New York Times]</ref> | |||

Disadvantages of NoSQL include lack of consistency as well as insufficient support for relational data. "Queries require re-architecting and recoding of existing products," according to one source.<ref> Xeround Launches SQL-Compliant Cloud Database, Sep 14, 2010</ref> | |||

==Relationship to cloud computing== | ==Relationship to cloud computing== | ||

The rise in popularity of NoSQL databases has been associated closely with the growth of cloud computing. Relational databases are not well suited to scaling across a large cluster of servers because of the difficulty of ensuring consistency, referential integrity, and query performance in a distributed relational environment. NoSQL databases, on the other hand, are designed with a distributed environment in mind, making them a good fit for the large clusters of commodity hardware that make up the cloud. | |||

Furthermore, the concessions made by NoSQL databases (giving up immediate consistency in exchange for increased availability and partition tolerance) are also a good fit for many of the rich, non-[[OLTP]] applications that are sprouting up as part of the Web 2.0 movement and are often hosted by cloud providers. The schemaless nature of NoSQL databases makes them an excellent tool for rapid prototyping and Agile development, both techniques commonly associated with cloud development, and since most leading implementations are FOSS, licensing for a large number of servers is not an issue. | |||

The efficiency of NoSQL in the cloud has given rise to a new class of offerings known as Database as a Service (DaaS) from a number of providers. Most notably, both Amazon ([[SimpleDB]]) and Google (in [[Google App Engine]]) have variations on a DaaS offering. | |||

<ref>[http://aws.amazon.com/simpledb/ Amazon Simple DB]</ref> | |||

<ref>[http://code.google.com/appengine/articles/datastore/overview.html Google App Engine Datastore]</ref> | |||

==Types of NoSQL Databases==

===Key-value Store===

A key-value store maintains data as pairs, each consisting of an indexed key and a value. In general, key-value stores provide a single operation: fetching a single value using its key. Some key-value store implementations include mechanisms for performing a join on two distinct tables. Examples of key-value stores include Oracle's BerkeleyDB and Amazon's Dynamo (a highly available key-value storage system that combines properties of relational databases with those of distributed hash tables, or DHTs).

====BerkeleyDB====

{{Image|Consistent_hashing.png|right|250px|Distributed hash tables are used by many distributed key-value stores in order to spread data between many servers.}}

BerkeleyDB is an open source, transactional, embedded database engine. It is available as a library that can be included in any application. Data are represented as key/value pairs. The keys and values in BerkeleyDB can be any objects supported by the programming language. Data are stored in files on disk, as a single file for each key-value store. BerkeleyDB also provides the option to maintain data stores in memory only, if the store is small enough to fit in main memory.

BerkeleyDB in itself does not provide a method for distributing data, but using a distributed hash table, it is possible to distribute data across multiple BerkeleyDB instances.
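
One common way to spread keys across several independent store instances is consistent hashing, the technique the figure above refers to. The following is a minimal sketch in Python, not part of BerkeleyDB itself: the class name <code>ConsistentHashRing</code>, the node labels, and the choice of MD5 with 100 virtual points per node are all assumptions made for illustration.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Maps keys to nodes on a hash ring (illustrative sketch only)."""

    def __init__(self, nodes, replicas=100):
        self.replicas = replicas   # virtual points placed on the ring per node
        self._ring = []            # sorted list of (hash, node) points
        self._hashes = []          # the hash values alone, for bisect
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value):
        # Any well-distributed hash works; MD5 is used here only for spread.
        return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)

    def _rebuild(self):
        self._ring.sort()
        self._hashes = [h for h, _ in self._ring]

    def add_node(self, node):
        for i in range(self.replicas):
            self._ring.append((self._hash(f"{node}#{i}"), node))
        self._rebuild()

    def remove_node(self, node):
        self._ring = [(h, n) for h, n in self._ring if n != node]
        self._rebuild()

    def node_for(self, key):
        if not self._ring:
            raise ValueError("ring is empty")
        # First point clockwise from the key's hash, wrapping around the ring.
        idx = bisect.bisect_right(self._hashes, self._hash(key)) % len(self._ring)
        return self._ring[idx][1]
```

With a ring built from, say, <code>["db1", "db2", "db3"]</code>, each key is routed by <code>node_for(key)</code> to the instance that should hold it. Removing a node only moves the keys that lived on that node; the others stay where they were, which is the main reason this scheme is preferred over a plain <code>hash(key) % n</code>.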

====Cassandra====

Facebook's software engineers faced the problem of managing huge amounts of growing data spread out over hundreds and hundreds of nodes, some of which were failing or overloaded. The task was to keep the database working efficiently despite its huge and scattered nature. Engineers Avinash Lakshman and Prashant Malik explained in an article what they did: | |||

<blockquote> | |||

Cassandra is a distributed storage system for managing very large amounts of structured data spread out across many commodity servers, while providing highly available service with no single point of failure. Cassandra aims to run on top of an infrastructure of hundreds of nodes (possibly spread across different data centers). At this scale, small and large components fail continuously. The way Cassandra manages the persistent state in the face of these failures drives the reliability and scalability of the software systems relying on this service. While in many ways Cassandra resembles a database and shares many design and implementation strategies therewith, it does not support a full relational data model; instead, it provides clients with a simple data model that supports dynamic control over data layout and format. Cassandra system was designed to run on cheap commodity hardware and handle high write throughput while not sacrificing read efficiency.---<small>Lakshman & Malik</small><ref>{{citation | |||

|last1 = Lakshman
|first1 = Avinash
|last2 = Malik
|first2 = Prashant
| url = http://www.cs.cornell.edu/projects/ladis2009/papers/lakshman-ladis2009.pdf
| title = Cassandra - A Decentralized Structured Storage System
| publisher = Cornell University
| pages = 6
}}</ref>

</blockquote> | |||

Some data structures Cassandra uses are:

* Column: A tuple (triplet) containing a name, a value, and a timestamp. This is the smallest Cassandra data container.
* SuperColumn: A tuple with a name and a value, but no timestamp.
* ColumnFamily: A data structure that can hold an unbounded number of rows, highly similar to the RDBMS concept of a ''table''. The column family's name is similar to a table name.
* SuperColumnFamily: The largest container.
* Keyspace: Like a schema; there is only one keyspace per application.<ref>{{citation
| last= Cassandra Non-Relational Database Help
| url = http://cassandra.nosqltutorial.com/articles/cassandra-data-model/
| title = Cassandra Tutorial Cassandra Non-Relational Database Help
| date = September 14, 2010
| pages =
}}</ref>
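
The containers listed above can be pictured as nested maps. The sketch below is a toy in-memory model in Python, not Cassandra's actual implementation or client API; the class and method names are hypothetical, super columns are left out for brevity, and the rule that the write with the newest timestamp wins is stated here as an assumption of the sketch.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Column:
    """Smallest container: a (name, value, timestamp) triplet."""
    name: str
    value: str
    timestamp: int


@dataclass
class ColumnFamily:
    """Roughly a table: row key -> {column name -> Column}."""
    name: str
    rows: dict = field(default_factory=dict)

    def insert(self, row_key, name, value, timestamp=None):
        if timestamp is None:
            timestamp = time.time_ns() // 1000   # microseconds (assumption)
        columns = self.rows.setdefault(row_key, {})
        current = columns.get(name)
        # Assumed rule for this sketch: the newest timestamp wins.
        if current is None or timestamp >= current.timestamp:
            columns[name] = Column(name, value, timestamp)

    def get(self, row_key, name):
        column = self.rows.get(row_key, {}).get(name)
        return None if column is None else column.value


@dataclass
class Keyspace:
    """Roughly a schema: one per application, holding column families."""
    name: str
    column_families: dict = field(default_factory=dict)

    def column_family(self, name):
        if name not in self.column_families:
            self.column_families[name] = ColumnFamily(name)
        return self.column_families[name]
```

Because rows are just maps from column names to columns, two rows in the same column family need not share the same set of columns, which is where the model departs from a relational table.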

====Project Voldemort==== | |||

Project Voldemort is a distributed key-value store that uses BerkeleyDB as its storage engine. It is developed and used by LinkedIn, and is written in Java. Like many distributed key-value stores, Project Voldemort uses a distributed hash table to distribute data between multiple servers, and to provide replication functionality. | |||

Interacting with a Project Voldemort DB is relatively simple:<ref>[http://project-voldemort.com/ Project Voldemort]</ref> | |||

<pre>
import voldemort.client.ClientConfig;
import voldemort.client.SocketStoreClientFactory;
import voldemort.client.StoreClient;
import voldemort.client.StoreClientFactory;
import voldemort.versioning.Versioned;

String bootstrapUrl = "tcp://localhost:6666";
StoreClientFactory factory = new SocketStoreClientFactory(
    new ClientConfig().setBootstrapUrls(bootstrapUrl));

// Create a client that executes operations on a single store.
StoreClient<String, String> client = factory.getStoreClient("my_store_name");

// Read the current versioned value, change it, and write it back.
Versioned<String> value = client.get("some_key");
value.setObject("some_value");
client.put("some_key", value);
</pre>

===Document-based Databases=== | |||

While key-value stores are an excellent solution for storing data in a distributed environment with near-linear access, many problem spaces necessitate data access patterns other than simple key-based indexing. Moreover, many applications require elements of the robust feature set typically associated with an [[RDBMS]], but without the tradeoffs necessitated by normalized relational data.

Document-based databases build on the basic concepts and architecture associated with key-value stores but layer an advanced feature set on top of the core KV storage.<ref>{{citation
| first=Harri | last=Kauhanen
| url = http://www.slideshare.net/harrikauhanen/nosql-3376398
| title = NoSQL databases
| journal = Computer}}</ref> Common document-based database features include (but are not limited to):

* Collections
* Ad-Hoc Queries
* Secondary Indexes
* Views and Aggregation

In a document-based database, values in the underlying key-value storage are referred to as ''documents''. In place of keys generated by the client application, document-based databases use system-generated ''document ids'' (an analog to the Primary Key in an RDBMS table). As a result, all application data in a document-based database is stored in the documents.

In order to support querying and other advanced operations, documents are stored as [[semi-structured data]]. | |||

====Semi-Structured Data==== | |||

Historically, data in computer systems was divided into two categories: structured (databases) and unstructured (free-form text). Structured data assigns semantic meaning to information based on the structure in which it is stored (e.g. the row layout of a relational database table). For instance, a database of users can easily support a query such as "all users with last name Robin" by investigating the last name column of the users table. A search against a text file could only identify occurrences of the word Robin, with no way to determine whether it is a first name, a last name, or neither.

Semi-structured data is a data storage strategy that assigns semantic meaning to information without the need for predefined structure. It does this by defining the semantics of the data along with the data itself. The most common format for semi-structured data is [[XML]] with [[JSON]] (JavaScript Object Notation) rapidly growing in popularity. | |||

====Collections==== | |||

Despite the schemaless nature of NoSQL databases it is often convenient to segregate different entity types in a document-based store for ease of querying. This is achieved using collections (also referred to as buckets or domains). Documents within the same collection should represent the same type of entity and as such all have a similar (though not identical) structure. | |||

Collections can be considered the rough equivalent of relational tables. However, while a hierarchical entity must span several relational tables, it is generally stored in a single collection (within a single document).

====Ad-Hoc Queries==== | |||

Because documents are stored as semi-structured data, document-based databases can support basic queries against attribute values (such as the earlier example) without requiring a predefined data schema. The query language varies depending on the data format used by a particular database (e.g. [[XPath]] for XML, JavaScript for JSON). At least one implementation ([[RavenDB]]) supports the use of [[LINQ]] queries popular on the Microsoft .NET platform.<ref>[http://ravendb.net/ RavenDB]</ref>

Ad-hoc queries are used only in the case of simple search predicates (usually =, ≠, >, <). More advanced queries are achieved using views, generally based on variations of the [[MapReduce]] algorithm. | |||
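
A simple-predicate query of this kind can be sketched in a few lines. The Python below is illustrative only and does not follow any particular database's query language: the function <code>find</code>, the operator table, and the sample documents are assumptions. Documents that lack the queried attribute are simply skipped, which is one plausible way to handle a schemaless collection.

```python
import operator

# The simple search predicates mentioned above.
OPERATORS = {
    "=": operator.eq,
    "!=": operator.ne,
    ">": operator.gt,
    "<": operator.lt,
}


def find(collection, attribute, op, value):
    """Return documents whose attribute satisfies the predicate.

    Documents without the attribute are skipped rather than treated as errors.
    """
    compare = OPERATORS[op]
    return [
        doc for doc in collection
        if attribute in doc and compare(doc[attribute], value)
    ]


# Documents in one collection share a similar, not identical, structure.
users = [
    {"_id": 1, "first_name": "Robin", "last_name": "Smith", "age": 31},
    {"_id": 2, "first_name": "Ann", "last_name": "Robin"},
    {"_id": 3, "last_name": "Robin", "age": 45, "city": "Dusseldorf"},
]

robins = find(users, "last_name", "=", "Robin")   # documents 2 and 3
```

Because each document carries its own attribute names, the query can tell a last name from a first name without any table definition.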

====Secondary Indexes==== | |||

In order to facilitate efficient queries on large data sets, many document-based databases support the ability to generate indexes on arbitrary data fields. Indexes are usually defined using a variation of the supported query language, for instance by replacing the search predicate with a boolean true in a JavaScript query.

Like documents themselves, indexes are generally distributed across all nodes in a cluster (although the specific strategy varies by implementation). Once generated, indexes are updated when data is inserted (or when nodes receive the latest data due to eventual consistency).

====Views and Aggregation==== | |||

Due to their distributed nature, document-based databases are an excellent match for distributed aggregation algorithms such as [[MapReduce]]. In general, any operation that would be performed against a relational database using a SQL GROUP BY clause and aggregate functions can be achieved using MapReduce against a document-based database. In fact, the [[Apache Hive]] project contains functionality to translate ad-hoc queries written in a SQL-like syntax directly into MapReduce functions.

<ref>[http://hadoop.apache.org/hive/ Apache Hive]</ref> | |||

The language used to define the MapReduce functions varies by implementation, but Javascript is most common. The Map portion of the function is used to select an initial set of data from a specified collection while Reduce is used to consolidate the data and calculate any desired aggregate values. | |||

Views are achieved by running MapReduce with a nominal pass-through Reduce function. Map functions can be used to perform substantial transformations on the document data before returning it to the result set. This power can be used in any number of ways, for instance picking only certain fields from a heterogeneous collection to return a homogeneous result. A single call to the Map function is permitted to produce several emits. [[Apache CouchDB]] has omitted an ad-hoc query function, focusing instead on the substantially more powerful MapReduce engine.

<ref>[http://couchdb.apache.org/ Apache CouchDB]</ref><ref> CouchDB NoSQL Database Ready for Production Use , JOAB JACKSON of IDG News Service, July 14, 2010, [http://www.nytimes.com/external/idg/2010/07/14/14idg-couchdb-nosql-database-ready-for-production-use-58614.html?src=tp The New York Times]</ref> | |||
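
The correspondence between GROUP BY and MapReduce described above can be made concrete with a single-process sketch. The Python below is a toy, not the engine of any particular database: <code>map_reduce</code>, <code>map_order</code>, <code>reduce_sum</code>, and the sample documents are hypothetical names and data, and a real system would run the map and reduce steps across many nodes.

```python
from collections import defaultdict


def map_reduce(collection, map_fn, reduce_fn):
    """Run map over every document, group emitted values by key, then reduce."""
    groups = defaultdict(list)
    for doc in collection:
        # A single map call may emit zero, one, or several (key, value) pairs.
        for key, value in map_fn(doc):
            groups[key].append(value)
    return {key: reduce_fn(key, values) for key, values in groups.items()}


def map_order(doc):
    """Emit (customer, total) for documents that look like orders."""
    if "customer" in doc and "total" in doc:
        yield doc["customer"], doc["total"]


def reduce_sum(key, values):
    """Aggregate step, comparable to SUM(total) ... GROUP BY customer."""
    return sum(values)


def reduce_passthrough(key, values):
    """Nominal reduce used for a view: return the mapped values unchanged."""
    return values


orders = [
    {"customer": "alice", "total": 10},
    {"customer": "bob", "total": 5},
    {"customer": "alice", "total": 20},
    {"note": "not an order"},   # heterogeneous documents are skipped by map
]

totals = map_reduce(orders, map_order, reduce_sum)
view = map_reduce(orders, map_order, reduce_passthrough)
```

The first call plays the role of an aggregate query, while the second shows a view: the same Map function with a pass-through Reduce returns the selected fields grouped by key.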

====Implementation Specific Features==== | |||

*In-Place Document Editing
*Persistent Views
*Multi-Version Concurrency Control (MVCC)
*Advanced Conflict Resolution
*Configurable Consistency/Redundancy Options
*Simple References

====Popular Document-Based Databases==== | |||

*Software
**Apache CouchDB (Erlang)
**MongoDB (C++)
**FleetDB (Clojure)
**Riak (C and Erlang)
**Raven (.NET, Supports LINQ)
*Database as a Service (DaaS)
**Amazon SimpleDB (Erlang)
**Cloudant - Hosted CouchDB<ref> YC-Funded Cloudant Launches Its NoSQL Cloud Database Platform, Alexia Tsotsis, Sep 3, 2010, [http://techcrunch.com/2010/09/03/cloudant/?utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+Techcrunch+%28TechCrunch%29 Tech Crunch]</ref>
**MongoHQ - Hosted MongoDB

===Column-oriented Databases===

A column-oriented database stores entries by column, as opposed to row-oriented databases, which store them by row. This makes data aggregation easier and improves disk performance. Examples of open-source and commercial column-oriented databases include Cassandra (Facebook), Bigtable (Google), Hypertable (an open-source implementation of Bigtable), and HBase (an open-source implementation of Bigtable).

====BigTable====

Bigtable is a distributed storage system for managing structured data, designed to scale reliably to petabytes of data. It was developed by Google around 2004 and is used by more than sixty Google products.<ref> Fay Chang, Jeffrey Dean, etc. [http://labs.google.com/papers/bigtable.html Bigtable: A Distributed Storage System for Structured Data] OSDI'06</ref> It is not a traditional row and column database; rather, it can be conceived of as a multi-dimensional sorted map indexed by a row key, a column key, and a timestamp. For example, to store a large collection of web pages, URLs would be used as row keys and aspects of the web pages as column names. Every read or write of data under a single row key is atomic, and columns can be added dynamically. The timestamps help synchronize data across the system because they let computers see which version is the most up to date. Rows are sorted lexicographically by row key, and ranges of consecutive row keys are grouped into "tablets". Column keys are grouped into sets called "column families", and a column key is named using the syntax ''family:qualifier''. Access control and disk and memory accounting are performed at the column-family level. The API includes functions for creating and deleting tables and column families and for changing cluster, table, and column-family metadata such as access control rights. Clients can write and read values, scan row ranges, perform single-row transactions, and use Bigtable together with MapReduce.

Overall, Bigtable provides clients with a simple data model that supports dynamic control over data layout and format. It promises high performance and high availability, and lets users scale the capacity of their clusters simply by adding more machines to the system.

<pre>
// Open the table
Table *T = OpenOrDie("/bigtable/web/webtable");

// Write a new anchor and delete an old anchor
RowMutation r1(T, "com.cnn.www");
r1.Set("anchor:www.c-span.org", "CNN");
r1.Delete("anchor:www.abc.com");
Operation op;
Apply(&op, &r1);

// Read from a table
Scanner scanner(T);
ScanStream *stream;
stream = scanner.FetchColumnFamily("anchor");
stream->SetReturnAllVersions();
scanner.Lookup("com.cnn.www");
for (; !stream->Done(); stream->Next()) {
  printf("%s %s %lld %s\n",
         scanner.RowName(),
         stream->ColumnName(),
         stream->MicroTimestamp(),
         stream->Value());
}
</pre>
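
The "multi-dimensional sorted map" data model can also be sketched independently of Google's API. The Python below is a single-machine toy, not Bigtable code: the class <code>SortedMapTable</code> and its methods are hypothetical, and storing the negated timestamp so that newer versions sort first is a design choice of this sketch.

```python
import bisect


class SortedMapTable:
    """Toy sorted map keyed by (row key, column key, timestamp)."""

    def __init__(self):
        self._keys = []    # sorted list of (row, column, -timestamp)
        self._cells = {}   # same key -> value

    def put(self, row, column, timestamp, value):
        key = (row, column, -timestamp)   # negated so newest sorts first
        if key not in self._cells:
            bisect.insort(self._keys, key)
        self._cells[key] = value

    def get(self, row, column):
        """Return the most recent value for (row, column), or None."""
        idx = bisect.bisect_left(self._keys, (row, column, float("-inf")))
        if idx < len(self._keys) and self._keys[idx][:2] == (row, column):
            return self._cells[self._keys[idx]]
        return None

    def scan_rows(self, start_row, end_row):
        """Yield (row, column, timestamp, value) for start_row <= row < end_row."""
        idx = bisect.bisect_left(self._keys, (start_row,))
        while idx < len(self._keys) and self._keys[idx][0] < end_row:
            row, column, neg_ts = self._keys[idx]
            yield row, column, -neg_ts, self._cells[self._keys[idx]]
            idx += 1
```

Keeping the keys in lexicographic order is what makes row-range scans cheap: all cells for neighbouring row keys sit next to each other, which is also why consecutive rows can be grouped into tablets.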

Bigtable offers scalability and better performance control. It is an extremely fast and extremely large-scale DBMS. It is not a traditional row and column database; rather, it is designed to scale into the petabyte range across hundreds or thousands of machines. It is easy to add more machines to the system without reconfiguration. Each table has multiple dimensions, one of which is a field for time (allowing versioning and garbage collection). Tables can be split into multiple tablets. If tablets grow large, they can be compressed.

==Future perspective==

== References ==

{{reflist|2}}[[Category:Suggestion Bot Tag]]

Latest revision as of 06:01, 26 September 2024

NoSQL refers to a number of non-relational distributed database architectures. The term has generated much interest in the computing database world although developer Emil Eifrem suggested the term was not clearly defined and hyped.[1] The NoSQL abbreviation might be best understood not as excluding SQL but as meaning Not only SQL, according to Eifrem.[2] NoSQL architectures usually store data as key-value pairs, rather than supporting relations. Some systems eliminate the guarantee of consistency (instead promising eventual consistency) in order to increase scalability. The distributed nature of NoSQL architectures makes such data stores highly scalable and fault-tolerant.

History

Beginnings

Databases were a chief component of the earliest computers. The earliest databases, from the time when mainframe computers were prominent in the 1960s and 1970s, had records with fixed individual fields. For example, a particular database might have had five fixed fields, such that a record might have data slots to hold a person's first name, last name, phone number, city, and employment status code. Accordingly, record number 10013 might have had the fields "Bill", "Robinson", 5551212, "Dusseldorf", and "hired". To query the database, a search would be done on all the records sequentially to see whether they met a specified condition, for example to pull every record of a person who lived in Dusseldorf.

Relational databases

As hardware and storage prices fell, yet data grew exponentially, new techniques were developed to hold data on dispersed computers rather than on one large centralized computer. Data could be kept in multiple computers over an internal network or even on computers throughout the world wide web. In a database management system, a table schema defines such things as tables, fields, relationships, views, indexes, functions, packages, procedures, and so forth, and was somewhat similar to a train network with different types of crews and machines tasked with maintaining parts of the rail network.

It was possible to have a single large relational database running across lots of different computers. The new structures built upon the old ideas of column and row but added new dimensions. For example, every relational database has an object called a "table" which has columns or fields (with preset parameters) and rows containing the actual data.[3] There were huge advantages to the new structured data arrangements. It increased availability and reliability and allowed the database to expand. It could be tailored to reflect a firm's organizational setup or client needs. A fire in a central building wouldn't shut down the database, since other computers had copies of the valuable information. And different servers could trade off tasks, so that if one server became extremely busy, other servers could pick up the demand. And some so-called nodes could go offline and the system would continue to function because of "transparent replication" and "fault tolerance." Clients could access the database through a protocol (not directly to the individual nodes.) It was cheaper overall, and could operate continuously; in contrast, a single large centralized computer would have to be shut down for maintenance, preventing the database from working during the down times. And it was possible to break off one server or module to upgrade or expand it. But it wasn't all rosy, either. The distributed structure was more complex and often required extra work to keep the different computers synchronized with each other. Security considerations were a factor too -- with valuable data spread out, there were multiple places where a hacker could break into the system or steal it in transit. The whole system had to maintain integrity, including keeping standards and adding new software as needed. And how would duplicated data in different servers be kept what was called concurrent or up-to-date?

New types of data structures were being developed. In a linked list, each record would have two placeholders for information; the first would hold the specific data, and the second would be a link or reference which pointed to the next record. So a whole series of records could be linked sequentially. It was possible to insert or delete a record provided that one adjusted the corresponding links and pointers on the records immediately before and immediately after the altered record. Usually linked list data structures had an extra record at the beginning and end of the list called a "sentinel", which held no data and was used mostly to mark a list's beginning or end. It meant that software programs could process each real record in a way where there was always a record before and after it; this removed special cases at the ends of the list and helped keep programs from crashing.
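
The role of the sentinel records can be sketched as follows. This Python example is illustrative only: it assumes a doubly linked list (each record also points back to the previous one), and the class and method names are hypothetical.

```python
class Node:
    """One record: its data plus links to the neighbouring records."""

    def __init__(self, data=None):
        self.data = data
        self.prev = None
        self.next = None


class LinkedList:
    """Doubly linked list with empty sentinel records at both ends."""

    def __init__(self):
        self.head = Node()   # sentinel marking the beginning
        self.tail = Node()   # sentinel marking the end
        self.head.next = self.tail
        self.tail.prev = self.head

    def insert_after(self, node, data):
        # Only the links of the records just before and after are adjusted.
        new = Node(data)
        new.prev, new.next = node, node.next
        node.next.prev = new
        node.next = new
        return new

    def append(self, data):
        return self.insert_after(self.tail.prev, data)

    def remove(self, node):
        # Every real record has a neighbour on each side, so there is
        # no special case for the first or last record.
        node.prev.next = node.next
        node.next.prev = node.prev

    def __iter__(self):
        node = self.head.next
        while node is not self.tail:
            yield node.data
            node = node.next
```

Because the sentinels guarantee that every real record has a predecessor and a successor, insertion and removal need no checks for an empty list or for the ends of the list.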

A B-tree is a tree data structure that keeps data sorted and allows searches, sequential access, insertions, and deletions in logarithmic time. B-trees are highly efficient and are used by many operating systems and languages, such as Novell and MUMPS. They provide rapid access to stored data using textual keys. The idea is to enable the exact piece of data to be accessed in few steps.

In a second type of data structure called a B-tree, data would be organized like a tree with branches, with records branching out from other records in a hierarchical format.[4] There were advantages to this arrangement as well. And some databases went from using straightforward row and column formats to using so-called hash tables or, when spread across many machines, distributed hash tables (DHTs). The hash was an intermediary function that took a piece of information called a key, such as a person's name, and used various algorithms or mathematical rules to point to a given record, or value, in an array. These are sometimes called "key-value" data structures. A hash table is a data structure that uses a hash function to map identifying values known as keys (such as a person's name) to their associated values (such as a telephone number). The hash function transforms the key into the index (called the hash) of an array element (called a slot or bucket) where the corresponding value is to be sought. Hash collisions, in which two different keys map to the same slot, do happen and must be handled explicitly in the code.
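
The mechanics described above (hash function, slots, and collisions) can be sketched briefly. The Python below is a minimal illustration rather than how any particular database implements its tables: the class name <code>HashTable</code> is hypothetical, and resolving collisions by keeping a small list per slot ("chaining") is one common choice among several.

```python
class HashTable:
    """Toy hash table that resolves collisions by chaining."""

    def __init__(self, slots=8):
        # Each slot (bucket) holds a list of (key, value) pairs.
        self._buckets = [[] for _ in range(slots)]

    def _index(self, key):
        # The hash function turns the key into a slot index.
        return hash(key) % len(self._buckets)

    def put(self, key, value):
        bucket = self._buckets[self._index(key)]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)   # overwrite an existing key
                return
        bucket.append((key, value))        # new key, possibly a collision

    def get(self, key, default=None):
        for existing_key, value in self._buckets[self._index(key)]:
            if existing_key == key:
                return value
        return default
```

For example, after <code>put("Bill Robinson", "5551212")</code>, a call to <code>get("Bill Robinson")</code> looks only in the one slot the key hashes to, instead of scanning every record.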

Programmers learned to work with techniques to keep far-flung computers on the same wavelength by such means as replication in which specialized software hunted for changes (when the software programs found changed data on one computer, they'd alert other computers). Another way was duplication in which one server would be dubbed the master server and it would have the most important version of the data. At night or off-hours, it would be updated by satellite servers, and then the master database would copy itself to all the satellites (under one arrangement; many alternatives were possible.) This way, all the computers would copy themselves to be like the master.

Structured Query Language

But with information scattered across the internet, how could questions be posed to the data? In 1970, Dr. E. F. Codd published a paper entitled A Relational Model of Data for Large Shared Data Banks. His IBM colleagues Donald Chamberlin and Raymond Boyce worked on a way to specify queries as relational expressions. As a result, a new way of analyzing databases known as Structured Query Language or SQL emerged which allowed users to search information across linked databases, and this proved to be a much more powerful and useful way of managing data. It didn't require database managers to rebuild new databases as new information came along; rather, they could link databases dynamically. SQL became the dominant language for what was called relational database systems, sometimes abbreviated as RDBMS (Relational Data-Base Management Systems) and various high-level software programs were developed to manage such database systems, such as MySQL, Microsoft SQL Server, PostgreSQL, and Oracle RDBMS.

New criteria were used to assess how well these relational databases were working. One set of criteria caught on and was dubbed the ACID properties (atomicity, consistency, isolation, and durability).

By the late 1990s, however, problems were beginning to emerge, particularly when databases became vast with records numbering in the hundreds of millions of pieces of data, and programmers were searching for better ways to get at data efficiently. One alternative to relational databases has been called the "NoSQL" data stores, and it was conceived as an alternative to SQL.[5]

Datasets were growing exponentially. Sometimes users needed to know only pieces of the data, or to query some parts of databases but not others. How could questions be posed to these data sets efficiently? Programmers who had been used to the idea of "one size fits all" found that traditional solutions were becoming cumbersome.

The word "NoSQL" (Not Only SQL) was first used by Carlo Strozzi in 1998 referring to a file-based database he was developing, which is actually a relational database without a SQL interface.

Only as the internet gave way to the masses and large scale concurrency and data generation ushered in a new era has the relational way of doing data management truly begun to break down, opening the door to alternatives. ---Michael Stonebraker, November 2009[6]

In contrast with the rather rigid ACID criteria for relational databases, a new set of criteria was being touted. It was called BASE, an acronym for "basically available, soft state, eventual consistency". Trade-offs were being made: it was no longer seen as essential for every transaction to be consistent immediately, as long as the information became consistent eventually, that is, in sufficient time to be useful.

Firms such as Google were pioneering ways of handling extremely large datasets. Google built a proprietary system called BigTable in 2004, which it used for many Google applications. It was an extremely fast, large-scale database management system: not a traditional row and column database, but something designed to handle petabytes of information running across hundreds or thousands of machines. New machines could be added without much fuss. Each table had multiple dimensions including a field for the timestamp, which allowed features such as "versioning" and "garbage collection." Tables could be split into smaller entities called "tablets", and when the data in a tablet grew too large, it could be compressed using specialized algorithms.

Emil Eifrem referred to characters from the movie The Matrix in a talk in July 2010 to demonstrate how data engineers are coping with complexity while trying to maintain speed and accuracy. Each character is a node with associated data such as "name", "age", and an ID. The database also records relations such as who knows who.

NoSQL approaches could manage data that was distributed across multiple sites, sometimes including offline data stored on large disk drives. This was a so-called more scalable approach.[6]

Notable events in the evolution of NoSQL include the following: MultiValue (also known as PICK) databases were developed in 1965; BerkeleyDB was created in 1996; Mnesia was developed by Ericsson as a soft real-time database for use in telecom (it does not use SQL as its query language); CouchDB, a document database, was started in 2005 and moved to the Apache Foundation in 2008; Google BigTable was started in 2004; Tokyo Cabinet, a successor to QDBM by Mikio Hirabayashi, was started in 2006; the research paper on Amazon Dynamo was released in 2007; the document database MongoDB was started in 2007 as part of an open source cloud computing stack; Facebook open-sourced the Cassandra project in 2008; Redis, a persistent key-value store, was started in 2009; Riak, another Dynamo-inspired database, was started in 2009; HBase is a BigTable clone for the Hadoop project, while Hypertable is another BigTable-type database, also from 2009. In 2009, Eric Evans reused the term NoSQL to describe the current surge in non-relational databases.[7] NoSQL databases tried to improve efficiency by removing the overhead and memory footprint of relational databases, thus speeding up searches. Other NoSQL approaches were more closely linked with programming languages and tied to web technologies, thus giving users new ways of querying data.[8]

Struggling to manage huge databases

Software engineer Cédric Beust suggested the term NoSQL wasn't meant in opposition to SQL but rather was "outside" or beyond the traditional understanding of SQL:

Google, Amazon, Facebook, and DARPA all recognized that when you scale systems large enough, you can never put enough iron in one place to get the job done (and you wouldn’t want to, to prevent a single point of failure). Once you accept that you have a distributed system, you need to give up consistency or availability, which the fundamental transactionality of traditional RDBMSs (relational database management systems) cannot abide. Based on the realization that something fundamentally different needed to be built, a lot of Very Smart People tackled the problem in a variety of different ways, making different trades along the way. Eventually, we all started getting together and trading ideas, and we realized that we needed some moniker to call all of these different databases that were not the traditional relational databases. The NoSQL name was coined more along the lines of “anything outside of the SQL part of the Venn diagram” rather than “opposed to SQL”. The NoSQL databases are a pragmatic response to growing scale of databases and the falling prices of commodity hardware. It’s not a noble counterculture movement (although it does attract the sort that have a great deal of mental flexibility), it’s just a way to get business done cheaper. ---Cédric Beust[9]

But it is not clear whether the NoSQL technologies will replace traditional SQL relational databases or whether these are new tools to take SQL in new directions. Analyst Roberto V. Zicari in the ODBMS Industry Watch journal sees the important focus for the database industry in finding "the right tool for the job", and believes this "mantra" for the software industry is an "important change in attitude."[10]

Proponents of NoSQL approaches complain that relational databases are slow, expensive, and heavily "taxing" of computer resources. Springsource engineer Jon Travis quipped "relational databases give you too much. They force you to twist your object data to fit a relational database management system."[11] Travis sees NoSQL champions particularly among web and Java developers who have had to build powerful data search capabilities without relying on existing data storage solutions. They learned from firms such as Amazon and Google how to efficiently process large swaths of information, including terabytes or even petabytes of data.[12] Firms like Cloudant write software designed to work when data is generated in a distributed way, possibly by sensor networks, web servers, or mobile storage services; the idea is to help small companies manage "big data", according to Alexia Tsotsis of TechCrunch.[13]

Analyst Julian Browne explained how new problems emerge when databases grow large and data is spread across different servers in numerous places around the web. Suppose, for example, that two users are interested in buying a particular book online but only one copy is available, and that information about this one book is held on several different computers. What is paramount is that the computers in this large distributed database coordinate with each other, so that if one person buys the book with a mouse click, the second person sees (almost instantly) that the book is no longer available. As soon as the book is purchased, one computer must notify all the other computers that the book has been sold. The idea is to prevent problems such as two people successfully ordering the same single copy.[14]

Browne commented on a conjecture posed by computer scientist Eric Brewer about potential problems with large distributed databases. Brewer identified three desirable properties of such databases:

- a database is consistent if each operation either takes effect completely or not at all, so that every computer sees the same data. In the book buying example, if a database is consistent then only one person will be able to buy the book.

- a database is available if it keeps responding to requests, that is, there is no "time out" or "not working" message.

- partition tolerance means the system keeps working when temporary breaks in communication, such as a chopped cable, split it into so-called partitions. The task is to keep computers synchronized with each other so that they are all on the same page, so to speak.

Brewer's conjecture held that it was impossible for a large distributed database to guarantee consistency, availability and partition tolerance all at once. At most, Brewer supposed, a system could have two of the three. MIT scientists Seth Gilbert and Nancy Lynch formally proved the conjecture in their paper "Brewer's Conjecture and the Feasibility of Consistent, Available, Partition-Tolerant Web Services".[15]
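The trade-off can be illustrated with the book buying example. The following is a minimal sketch, not taken from Browne or from Gilbert and Lynch: the class CapSketch, its Replica type and the two purchase policies are hypothetical names invented for illustration, and the model assumes just two replicas that each hold one copy of the stock count.

```java
import java.util.HashMap;
import java.util.Map;

public class CapSketch {

    /** One replica holding its own copy of the stock count for each book (hypothetical model). */
    static class Replica {
        final Map<String, Integer> stock = new HashMap<>();
        Replica peer;          // the other copy of the data
        boolean partitioned;   // true while the link to the peer is cut

        /** Consistency first: refuse the sale unless the peer can be updated too. */
        boolean buyConsistent(String book) {
            if (partitioned) {
                return false;                   // gives up availability during a partition
            }
            int left = stock.getOrDefault(book, 0);
            if (left == 0) {
                return false;                   // sold out on every replica
            }
            stock.put(book, left - 1);
            peer.stock.put(book, left - 1);     // synchronous update of the peer
            return true;
        }

        /** Availability first: always answer from the local copy. */
        boolean buyAvailable(String book) {
            int left = stock.getOrDefault(book, 0);
            if (left == 0) {
                return false;
            }
            stock.put(book, left - 1);
            if (!partitioned) {
                peer.stock.put(book, left - 1);
            }
            return true;                        // may oversell during a partition
        }
    }

    /** Two buyers each contact a different replica; returns how many sales are accepted. */
    static int sales(boolean partitioned, boolean preferAvailability) {
        Replica a = new Replica();
        Replica b = new Replica();
        a.peer = b;
        b.peer = a;
        a.stock.put("book", 1);                 // a single copy is for sale
        b.stock.put("book", 1);
        a.partitioned = partitioned;
        b.partitioned = partitioned;

        int sold = 0;
        for (Replica r : new Replica[] {a, b}) {
            boolean ok = preferAvailability ? r.buyAvailable("book") : r.buyConsistent("book");
            if (ok) {
                sold++;
            }
        }
        return sold;
    }

    public static void main(String[] args) {
        System.out.println("no partition:                    " + sales(false, false));
        System.out.println("partition, consistency first:    " + sales(true, false));
        System.out.println("partition, availability first:   " + sales(true, true));
    }
}
```

Without a partition exactly one sale is accepted under either policy. During a partition the consistency-first policy accepts none (the service is unavailable), while the availability-first policy accepts two sales of the single copy (the data is inconsistent).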

Gilbert and Lynch explained:

Interactions with web services are expected to behave in a transactional manner: operations commit or fail in their entirety (atomic), transactions never observe or result in inconsistent data (consistent), uncommitted transactions are isolated from each other (isolated), and once a transaction is committed it is permanent (durable). It is clearly important, for example, that billing information and commercial transaction records be handled with this type of strong consistency.---Gilbert and Lynch[16]

In the NoSQL world of huge databases, trade-offs are inevitable, according to Eifrem. Key-value stores are better at handling extra-large datasets, while graph databases are better at handling complexity but are the "most challenging" to get to "scale to size".

Relational databases distributed across many servers have difficulty achieving all three objectives of consistency, availability and partition tolerance, so trade-offs are involved; for example, one trade-off is to substitute "eventual consistency" (copies of the data may briefly disagree but converge once updates have propagated) for immediate "consistency". How these trade-offs are handled is typically the province of NoSQL.
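Eventual consistency can be sketched in a few lines. The example below is an illustration under stated assumptions rather than the mechanism of any particular product: the names EventualConsistencySketch, Stamped, Replica and syncFrom are hypothetical, conflicts are resolved by a simple "last write wins" rule on a logical timestamp, and a tie keeps the value already held.

```java
import java.util.HashMap;
import java.util.Map;

public class EventualConsistencySketch {

    /** A value tagged with the logical time of the write that produced it. */
    static final class Stamped {
        final String value;
        final long timestamp;

        Stamped(String value, long timestamp) {
            this.value = value;
            this.timestamp = timestamp;
        }
    }

    /** One copy of the data; copies are reconciled later instead of on every write. */
    static class Replica {
        final Map<String, Stamped> data = new HashMap<>();

        void write(String key, String value, long timestamp) {
            merge(key, new Stamped(value, timestamp));
        }

        String read(String key) {
            Stamped s = data.get(key);
            return s == null ? null : s.value;
        }

        /** Last write wins: keep the incoming value only if it is strictly newer. */
        void merge(String key, Stamped incoming) {
            Stamped current = data.get(key);
            if (current == null || incoming.timestamp > current.timestamp) {
                data.put(key, incoming);
            }
        }

        /** Background synchronization: pull every entry held by another replica. */
        void syncFrom(Replica other) {
            for (Map.Entry<String, Stamped> e : other.data.entrySet()) {
                merge(e.getKey(), e.getValue());
            }
        }
    }

    /** Returns what replica b reads before and after it catches up with replica a. */
    static String[] demo() {
        Replica a = new Replica();
        Replica b = new Replica();
        a.write("book:status", "available", 1);
        b.syncFrom(a);
        a.write("book:status", "sold", 2);          // b has not heard about this yet
        String stale = b.read("book:status");       // still the old value
        b.syncFrom(a);                              // updates propagate
        String fresh = b.read("book:status");       // now agrees with a
        return new String[] {stale, fresh};
    }

    public static void main(String[] args) {
        String[] r = demo();
        System.out.println("before sync: " + r[0] + ", after sync: " + r[1]);
    }
}
```

Between the second write and the second synchronization, a reader of replica b sees stale data; that window is the price paid for not coordinating every write.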

Database developer Emil Eifrem suggested in July 2010 that there were four trends in NoSQL: (1) exponential growth in data: "This year more new information will be generated than in all the previous years combined." (2) greater connectedness, with more (and more sophisticated) hyperlinks (3) more information built around each node, which Eifrem terms semi-structure (unlike the 1970s, when a worker had one title, today workers have seven to ten titles, and data must be sophisticated enough to reflect this) (4) a movement away from different applications sharing one data set, which he terms architecture.[17]

NoSQL vs. RDBMS

In many cases, NoSQL databases can process data more quickly than traditional relational database management systems. One reason for this is that data representation in NoSQL databases is much simpler than in relational systems. For example, a table in a relational database might have many columns, but data in a key-value store will always have only two parts: the key and the value. In addition, many NoSQL databases do not fully support ACID transactions. While this allows faster performance, it can also be risky in applications where precision is needed, such as in banking applications.[18]

- Document stores have a database consisting of a collection of (key, value) pairs along with a "payload".[19]

- Key-value stores have records which consist of a pair including a key and a payload, which are located using distributed hash tables (or DHTs). Examples include Memcachedb and Dynamo.[20]

With these arrangements, a lookup typically yields one record at a time rather than the result set of a typical SQL query. The NoSQL approach means that databases can scale horizontally across several servers as well as vertically. In a traditional SQL approach, data is typically held on one server; if a second server is used, then the problem of how to synchronize data between both servers must be dealt with. NoSQL databases, however, work more easily across a so-called cloud of servers, although they are somewhat limited to simpler requests for data. While a traditional relational database can be conceived as data organized in tables with rows (representing records) and columns (representing fields), NoSQL databases can be organized into objects, key–value pairs or tuples. A key benefit of NoSQL is that it marries object-oriented programming with data storage more directly than traditional relational databases do. Computerworld analyst David Intersimone believes, however, that relational databases will continue to be dominant in the foreseeable future, but concedes that NoSQL technologies may play a greater role.[21]

Disadvantages of NoSQL

NoSQL databases are fast for simple tasks; however, they may be time-consuming and difficult to use for more complex tasks because, without SQL, they require manual query programming. Many NoSQL databases are less reliable than relational databases in this respect since they don't natively support ACID properties. If users want to apply ACID constraints to a data set, they need additional programming. Consistency is another issue that arises from not supporting ACID. If users are not familiar with the technology, they might not be able to determine whether the approach is better for their purposes. In addition, many open source NoSQL applications don't have customer support or management tools yet.
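To make "manual query programming" concrete, the sketch below performs by hand what a relational database would express as a single join. It is only an illustration: the class ManualJoinSketch, the record layout and the sample data are all hypothetical, and two in-memory maps stand in for two key-value stores.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;

public class ManualJoinSketch {

    // Roughly equivalent SQL:
    //   SELECT o.title FROM orders o JOIN customers c ON o.customer_id = c.id
    //   WHERE c.city = ? ORDER BY o.title;
    static List<String> titlesBoughtIn(String city,
                                       Map<String, Map<String, String>> customers,
                                       Map<String, Map<String, String>> orders) {
        List<String> titles = new ArrayList<>();
        // Scan every order, then look up its customer by key: the join is application code.
        for (Map<String, String> order : orders.values()) {
            Map<String, String> customer = customers.get(order.get("customerId"));
            if (customer != null && city.equals(customer.get("city"))) {
                titles.add(order.get("title"));
            }
        }
        Collections.sort(titles);   // ordering is also the application's job
        return titles;
    }

    /** Runs the query against a small hypothetical data set. */
    static List<String> demo() {
        Map<String, Map<String, String>> customers = Map.of(
                "c1", Map.of("name", "Ann", "city", "Oslo"),
                "c2", Map.of("name", "Bob", "city", "Rome"));
        Map<String, Map<String, String>> orders = Map.of(
                "o1", Map.of("customerId", "c1", "title", "Dune"),
                "o2", Map.of("customerId", "c2", "title", "Ulysses"),
                "o3", Map.of("customerId", "c1", "title", "Emma"));
        return titlesBoughtIn("Oslo", customers, orders);
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

The full scan over orders is the cost of having no query planner or secondary index; a real application would usually maintain its own index (for example, a key per customer listing that customer's order keys).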

Analyst Joab Jackson of IDG News suggested that NoSQL querying can be done, but that users sometimes have to think differently about how to construct a query. Accordingly, there is a learning curve to using the new approach. NoSQL approaches using CouchDB allow users to build a web application without a so-called "middle tier", eliminating the Java stack.[22]

Disadvantages of NoSQL include lack of consistency as well as insufficient support for relational data. "Queries require re-architecting and recoding of existing products," according to one source.[23]

Relationship to cloud computing

The rise in popularity of NoSQL databases has been associated closely with the growth of cloud computing. Relational databases are not well suited to scaling across a large cluster of servers due to difficulty ensuring consistency and referential integrity as well as query performance in a distributed relational environment. NoSQL databases on the other hand are designed with a distributed environment in mind making them a perfect fit for the large clusters of commodity hardware that make up the cloud.

Furthermore, the concessions made by NoSQL databases (giving up immediate consistency in exchange for increased availability and partition tolerance) are also a good fit for many of the rich, non-OLTP applications that are sprouting up as part of the Web 2.0 movement and are often hosted by cloud providers. The schemaless nature of NoSQL databases makes them an excellent tool for rapid prototyping and Agile development, both techniques commonly associated with cloud development, and since most leading implementations are FOSS, licensing for a large number of servers is not an issue.

The efficiency of NoSQL in the cloud has given rise to a new class of offerings known as Database as a Service (DaaS) from a number of providers. Most notably, both Amazon (SimpleDB) and Google (in Google App Engine) have variations on a DaaS offering. [24] [25]

Types of NoSQL Databases

Key-value Store

A key-value store maintains data as a pair consisting of an indexed key and a value. In general, key-value stores are built around a single lookup operation: fetching a single value using its key. Some key-value store implementations include mechanisms for performing a join on two distinct tables. Examples of key-value stores include Oracle's BerkeleyDB and Amazon's Dynamo (a highly available key-value structured storage system with properties of both a database and a distributed hash table, or DHT).

BerkeleyDB

BerkeleyDB is an open source, transactional, embedded database engine. It is available as a library that can be included in any application. Data are represented as key/value pairs. The keys and values in BerkeleyDB can be any objects supported by the programming language. Data are stored in files on disk, as a single file for each key-value store. BerkeleyDB also provides the option to maintain data stores in memory only, if the store is small enough to fit in main memory.

BerkeleyDB provides a number of features competitive with relational databases, including support for transactions, two-phase locking, joins, and write-ahead logging. These features make BerkeleyDB very reliable. The BerkeleyDB engine is used in a number of applications. The MySQL database management system has offered BerkeleyDB as an option for the storage engine.[26]

BerkeleyDB in itself does not provide a method for distributing data, but using a distributed hash table, it is possible to distribute data across multiple BerkeleyDB instances.
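One common way to spread keys over several independent store instances is consistent hashing, a technique described in the Dynamo paper. The following is a minimal sketch of the idea and not BerkeleyDB's or any product's API: the class HashRingSketch, the instance names such as "db-a", the use of CRC32 as the hash function and the figure of 100 virtual nodes per instance are all assumptions made for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.TreeMap;
import java.util.zip.CRC32;

public class HashRingSketch {

    private static final int VIRTUAL_NODES = 100;   // points on the ring per instance (assumed)

    // Position on the ring -> name of the store instance that owns that position.
    private final TreeMap<Long, String> ring = new TreeMap<>();

    /** Stable hash of a string; CRC32 is used here only because it is in the JDK. */
    static long hash(String s) {
        CRC32 crc = new CRC32();
        crc.update(s.getBytes(StandardCharsets.UTF_8));
        return crc.getValue();
    }

    /** Places an instance at many points on the ring to even out the load. */
    void addNode(String node) {
        for (int i = 0; i < VIRTUAL_NODES; i++) {
            ring.put(hash(node + "#" + i), node);
        }
    }

    /** Removes only the ring positions that still belong to this instance. */
    void removeNode(String node) {
        for (int i = 0; i < VIRTUAL_NODES; i++) {
            ring.remove(hash(node + "#" + i), node);
        }
    }

    /** A key belongs to the first instance at or after its hash, wrapping around the ring. */
    String nodeFor(String key) {
        if (ring.isEmpty()) {
            throw new IllegalStateException("no store instances registered");
        }
        Map.Entry<Long, String> owner = ring.ceilingEntry(hash(key));
        return (owner != null ? owner : ring.firstEntry()).getValue();
    }

    public static void main(String[] args) {
        HashRingSketch ring = new HashRingSketch();
        ring.addNode("db-a");
        ring.addNode("db-b");
        ring.addNode("db-c");
        System.out.println("some_key is stored on " + ring.nodeFor("some_key"));
    }
}
```

The design choice worth noting is that removing an instance only reassigns the keys that instance owned; keys held by the other instances stay where they are, which keeps data movement small when the cluster changes.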

Cassandra

Facebook's software engineers faced the problem of managing huge and growing amounts of data spread out over hundreds of nodes, some of which were failing or overloaded at any given time. The task was to keep the database working efficiently despite its huge and scattered nature. Engineers Avinash Lakshman and Prashant Malik explained in an article what they did:

Cassandra is a distributed storage system for managing very large amounts of structured data spread out across many commodity servers, while providing highly available service with no single point of failure. Cassandra aims to run on top of an infrastructure of hundreds of nodes (possibly spread across different data centers). At this scale, small and large components fail continuously. The way Cassandra manages the persistent state in the face of these failures drives the reliability and scalability of the software systems relying on this service. While in many ways Cassandra resembles a database and shares many design and implementation strategies therewith, it does not support a full relational data model; instead, it provides clients with a simple data model that supports dynamic control over data layout and format. Cassandra system was designed to run on cheap commodity hardware and handle high write throughput while not sacrificing read efficiency.---Lakshman & Malik[27]

Some data structures Cassandra uses are:

- Column: A tuple (triplet) containing a name, a value and a timestamp. This is the smallest Cassandra data container.

- SuperColumn: A tuple with a name and a value, but no timestamp; its value is a collection of columns.

- ColumnFamily: A data structure which can keep an unbounded number of rows, highly similar to the RDBMS concept of a table. The ColumnFamily's name is similar to a table name.

- SuperColumnFamily: The largest container.

- Keyspace: Like a schema; there is typically only one keyspace per application.[28]
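The containers listed above nest inside one another, which can be sketched with ordinary maps. This is a simplified model and not Cassandra's client API: the class names mirror the terms in the list but are hypothetical, SuperColumns are omitted for brevity, and the rule that a strictly newer timestamp replaces an older column is a simplification of how Cassandra reconciles conflicting writes.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.SortedMap;
import java.util.TreeMap;

public class ColumnModelSketch {

    /** Column: the smallest container, a (name, value, timestamp) triplet. */
    static final class Column {
        final String name;
        final String value;
        final long timestamp;

        Column(String name, String value, long timestamp) {
            this.name = name;
            this.value = value;
            this.timestamp = timestamp;
        }
    }

    /** ColumnFamily: row key -> columns of that row, kept sorted by column name. */
    static final class ColumnFamily {
        final Map<String, SortedMap<String, Column>> rows = new HashMap<>();

        /** Stores a column; an existing column is replaced only by a strictly newer timestamp. */
        void insert(String rowKey, Column column) {
            SortedMap<String, Column> row = rows.computeIfAbsent(rowKey, k -> new TreeMap<>());
            Column existing = row.get(column.name);
            if (existing == null || column.timestamp > existing.timestamp) {
                row.put(column.name, column);
            }
        }

        /** Returns the value of one column of one row, or null if it is absent. */
        String get(String rowKey, String columnName) {
            SortedMap<String, Column> row = rows.get(rowKey);
            Column column = row == null ? null : row.get(columnName);
            return column == null ? null : column.value;
        }
    }

    /** Keyspace: column family name -> ColumnFamily, comparable to a schema. */
    static final class Keyspace {
        final Map<String, ColumnFamily> families = new HashMap<>();

        ColumnFamily family(String name) {
            return families.computeIfAbsent(name, k -> new ColumnFamily());
        }
    }

    public static void main(String[] args) {
        Keyspace app = new Keyspace();
        ColumnFamily users = app.family("Users");
        users.insert("user1", new Column("email", "old@example.org", 1));
        users.insert("user1", new Column("email", "new@example.org", 2));
        System.out.println(users.get("user1", "email"));
    }
}
```

Unlike a relational table, nothing forces two rows of the same ColumnFamily to carry the same set of column names, which is what gives clients the "dynamic control over data layout" mentioned in the quotation above.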

Project Voldemort

Project Voldemort is a distributed key-value store that uses BerkeleyDB as its storage engine. It is developed and used by LinkedIn, and is written in Java. Like many distributed key-value stores, Project Voldemort uses a distributed hash table to distribute data between multiple servers, and to provide replication functionality.

Interacting with a Project Voldemort DB is relatively simple:[29]

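import voldemort.client.ClientConfig;
import voldemort.client.SocketStoreClientFactory;
import voldemort.client.StoreClient;
import voldemort.client.StoreClientFactory;
import voldemort.versioning.Versioned;

// address of any node in the cluster, used to discover the others
String bootstrapUrl = "tcp://localhost:6666";
StoreClientFactory factory = new SocketStoreClientFactory(new ClientConfig().setBootstrapUrls(bootstrapUrl));

// create a client that executes operations on a single store
StoreClient<String, String> client = factory.getStoreClient("my_store_name");

// read the current versioned value (assumes "some_key" already exists), change it, and write it back
Versioned<String> value = client.get("some_key");
value.setObject("some_value");
client.put("some_key", value);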

Document-based Databases