Journal impact factor: Difference between revisions

imported>ZachPruckowski m (→External links: - as long as I'm making minor changes to this page, may as well get the IWs.) |

mNo edit summary |

||

| (42 intermediate revisions by 10 users not shown) | |||

| Line 1: | Line 1: | ||

{{subpages}} | |||

The '''journal impact factor''', very often abbreviated '''IF''' (dropping the ''journal'' in its name), is a measure of the citations to [[scientific journal|science and social science journals]].<ref name="pmid16391221">{{cite journal| author=Garfield E| title=The history and meaning of the journal impact factor. | journal=JAMA | year= 2006 | volume= 295 | issue= 1 | pages= 90-3 | pmid=16391221 | |||

| url=http://www.ncbi.nlm.nih.gov/entrez/eutils/elink.fcgi?dbfrom=pubmed&retmode=ref&cmd=prlinks&id=16391221 | doi=10.1001/jama.295.1.90 }} </ref><ref name="pmid16324222">{{cite journal |author=Dong P, Loh M, Mondry A |title=The "impact factor" revisited |journal=Biomed Digit Libr |volume=2 |issue= |pages=7 |year=2005 |month=December |pmid=16324222 |doi=10.1186/1742-5581-2-7 |url=http://www.bio-diglib.com/content/2//7 |issn=}}</ref> It is frequently used as a [[proxy (statistics)|proxy]] for the importance of a journal to its field. | |||

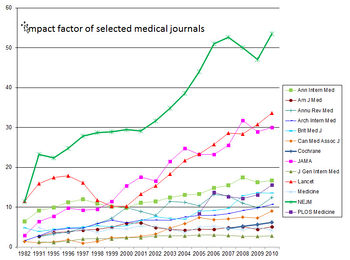

{{Image|2010 - Impact factor of selected medical journals.jpg|right|350px|Impact factor of selected medical journals since 1982.}} | |||

==Overview== | ==Overview== | ||

The Impact factor was devised by [[Eugene Garfield]], the founder of the [[Institute for Scientific Information]], now part of [[Thomson Corporation | Thomson]], a large worldwide US-based publisher. Impact factors are calculated each year by the Institute for Scientific Information for those journals which it indexes, and the factors and indices are published in ''[[Journal Citation Reports]]''. Some related values, also calculated and published by the same organization, are: | The Impact factor was devised by [[Eugene Garfield]], the founder of the [[Institute for Scientific Information]], now part of [[Thomson Corporation | Thomson]], a large worldwide US-based publisher. Impact factors are calculated each year by the Institute for Scientific Information for those journals which it indexes, and the factors and indices are published in ''[[Journal Citation Reports]]''. Some related values, also calculated and published by the same organization, are: | ||

| Line 34: | Line 37: | ||

A convenient way of thinking about it is that a journal that is cited once, on average, for each article published has an IF of 1 in the equation above. There are no articles to be averaged, just the one article. | A convenient way of thinking about it is that a journal that is cited once, on average, for each article published has an IF of 1 in the equation above. There are no articles to be averaged, just the one article. | ||

There are some nuances to this: ISI excludes certain article types (such as news items, correspondence, and errata) from the denominator. New journals, that are indexed from their first published issue, will receive an Impact Factor after the completion of two years' indexing; in this case, the citations to the year prior to Volume 1, and the number of articles published in the year prior to Volume 1 are known zero values. Journals that are indexed starting with a volume other than the first volume will not have an Impact Factor published until three complete data-years are known; annuals and other irregular publications, will sometimes publish no items in a particular year, affecting the count. The impact factor is for a specific time period; while it is appropriate for some fields of science such as [[molecular biology]], it is not for such subjects with a slower publication pattern, such as [[ecology]]. It is possible to calculate the impact factor for any desired period, and the web site gives instructions. ''Journal Citation Reports'' includes a table of the relative rank of journals by Impact factor, in each specific science discipline, such as [[organic chemistry]] or [[psychiatry]]. | There are some nuances to this: ISI excludes certain article types (such as news items, correspondence, and errata) from the denominator.<ref name="pmid19738096">{{cite journal| author=McVeigh ME, Mann SJ| title=The journal impact factor denominator: defining citable (counted) items. | journal=JAMA | year= 2009 | volume= 302 | issue= 10 | pages= 1107-9 | pmid=19738096 | ||

| url=http://www.ncbi.nlm.nih.gov/entrez/eutils/elink.fcgi?dbfrom=pubmed&retmode=ref&cmd=prlinks&id=19738096 | doi=10.1001/jama.2009.1301 }}></ref> New journals, that are indexed from their first published issue, will receive an Impact Factor after the completion of two years' indexing; in this case, the citations to the year prior to Volume 1, and the number of articles published in the year prior to Volume 1 are known zero values. Journals that are indexed starting with a volume other than the first volume will not have an Impact Factor published until three complete data-years are known; annuals and other irregular publications, will sometimes publish no items in a particular year, affecting the count. The impact factor is for a specific time period; while it is appropriate for some fields of science such as [[molecular biology]], it is not for such subjects with a slower publication pattern, such as [[ecology]]. It is possible to calculate the impact factor for any desired period, and the web site gives instructions. ''Journal Citation Reports'' includes a table of the relative rank of journals by Impact factor, in each specific science discipline, such as [[organic chemistry]] or [[psychiatry]]. | |||

The JIF can be calculated for journals not included in the JIF report.<ref name="pmid9403675">{{cite journal| author=Stegmann J| title=How to evaluate journal impact factors. | journal=Nature | year= 1997 | volume= 390 | issue= 6660 | pages= 550 | pmid=9403675 | doi=10.1038/37463 | pmc= | url= }} </ref> | |||

== Debate == | == Debate == | ||

It is sometimes useful to be able to compare different journals and research groups. For example, a sponsor of scientific research might wish to compare the results to assess the productivity of its projects. An objective measure of the importance of different publications is then required and the impact factor (or number of publications) are the only ones publicly available. However, it is important to remember that different scholarly disciplines can have very different publication and citation practices, which affect not only the number of citations, but how quickly, after publication, most articles in the subject reach their highest level of citation. In all cases, it is only relevant to consider the rank of the journal in a category of its peers, rather than the raw Impact Factor value. | It is sometimes useful to be able to compare different journals and research groups. For example, a sponsor of scientific research might wish to compare the results to assess the productivity of its projects. An objective measure of the importance of different publications is then required and the impact factor (or number of publications) are the only ones publicly available. However, it is important to remember that different scholarly disciplines can have very different publication and citation practices, which affect not only the number of citations, but how quickly, after publication, most articles in the subject reach their highest level of citation. In all cases, it is only relevant to consider the rank of the journal in a category of its peers, rather than the raw Impact Factor value. | ||

Impact factors are not infallible measures of journal quality. For example, it is unclear whether the number of citations a paper garners measures its actual quality or simply reflects the sheer number of publications in that particular area of research and whether there is a difference between them. Furthermore, in a journal which has long lag time between submission and publication, it might be impossible to cite articles within the three-year window. Indeed, for some journals, the time between submission and publication can be over two years, which leaves less than a year for citation. On the other hand, a longer temporal window would be slow to adjust to changes in journal impact factors. Thus, The impact factor is appropriate for some fields of science such as molecular biology, it is not for such subjects with a slower publication pattern, such as Ecology. (It is possible to calculate the impact factor for any desired period, and the web site gives instructions.) | Impact factors are not infallible measures of journal quality.<ref name="pmid9056804">{{cite journal |author=Seglen PO |title=Why the impact factor of journals should not be used for evaluating research |journal=BMJ |volume=314 |issue=7079 |pages=498–502 |year=1997 |month=February |pmid=9056804 |doi= |url=http://bmj.bmjjournals.com/cgi/content/full/314/7079/497 |issn=}}</ref><ref name="pmid10196574">{{cite journal |author= |title=Citation data: the wrong impact? |journal=Nat. Neurosci. |volume=1 |issue=8 |pages=641–2 |year=1998 |month=December |pmid=10196574 |doi=10.1038/3639 |url=http://dx.doi.org/10.1038/3639 |issn=}}</ref> For example, it is unclear whether the number of citations a paper garners measures its actual quality or simply reflects the sheer number of publications in that particular area of research and whether there is a difference between them. 
Furthermore, in a journal which has long lag time between submission and publication, it might be impossible to cite articles within the three-year window. Indeed, for some journals, the time between submission and publication can be over two years, which leaves less than a year for citation. On the other hand, a longer temporal window would be slow to adjust to changes in journal impact factors. Thus, The impact factor is appropriate for some fields of science such as molecular biology, it is not for such subjects with a slower publication pattern, such as Ecology. (It is possible to calculate the impact factor for any desired period, and the web site gives instructions.) | ||

Favorable properties of the impact factor include: | Favorable properties of the impact factor include: | ||

| Line 45: | Line 51: | ||

* Results are widely (though not freely) available to use and understand, | * Results are widely (though not freely) available to use and understand, | ||

* They are an objective measure, and have a wider acceptance than any of the alternatives. | * They are an objective measure, and have a wider acceptance than any of the alternatives. | ||

* In practice, the | * In practice, the alternative measure of quality is "prestige." This is rating by reputation, which is very slow to change, and cannot be quantified or objectively used. It merely demonstrates popularity. The impact factor is more objective, yet correlates with prestige.<ref name="pmid12572533">{{cite journal |author=Saha S, Saint S, Christakis DA |title=Impact factor: a valid measure of journal quality? |journal=J Med Libr Assoc |volume=91 |issue=1 |pages=42–6 |year=2003 |month=January |pmid=12572533 |doi= |url= |issn=}}</ref> | ||

* Impact factor is "commensurate with most proposed hierarchies of evidence".<ref name="pmid15900006">{{cite journal| author=Patsopoulos NA, Analatos AA, Ioannidis JP| title=Relative citation impact of various study designs in the health sciences. | journal=JAMA | year= 2005 | volume= 293 | issue= 19 | pages= 2362-6 | pmid=15900006 | doi=10.1001/jama.293.19.2362 | pmc= | url=http://www.ncbi.nlm.nih.gov/entrez/eutils/elink.fcgi?dbfrom=pubmed&tool=sumsearch.org/cite&retmode=ref&cmd=prlinks&id=15900006 }} </ref> | |||

* Impact factor is associated with the conduct<ref name="pmid16279896">{{cite journal| author=Gluud LL, Sørensen TI, Gøtzsche PC, Gluud C| title=The journal impact factor as a predictor of trial quality and outcomes: cohort study of hepatobiliary randomized clinical trials. | journal=Am J Gastroenterol | year= 2005 | volume= 100 | issue= 11 | pages= 2431-5 | pmid=16279896 | doi=10.1111/j.1572-0241.2005.00327.x | pmc= | url=http://www.ncbi.nlm.nih.gov/entrez/eutils/elink.fcgi?dbfrom=pubmed&tool=sumsearch.org/cite&retmode=ref&cmd=prlinks&id=16279896 }} </ref> and quality of reporting<ref name="pmid19358717">{{cite journal| author=Ethgen M, Boutron L, Steg PG, Roy C, Ravaud P| title=Quality of reporting internal and external validity data from randomized controlled trials evaluating stents for percutaneous coronary intervention. | journal=BMC Med Res Methodol | year= 2009 | volume= 9 | issue= | pages= 24 | pmid=19358717 | doi=10.1186/1471-2288-9-24 | pmc=PMC2679061 | url=http://www.ncbi.nlm.nih.gov/entrez/eutils/elink.fcgi?dbfrom=pubmed&tool=sumsearch.org/cite&retmode=ref&cmd=prlinks&id=19358717 }} </ref> of [[randomized controlled trial]]s. Some studies have not found an association.<ref name="pmid16426086">{{cite journal| author=Barbui C, Cipriani A, Malvini L, Tansella M| title=Validity of the impact factor of journals as a measure of randomized controlled trial quality. | journal=J Clin Psychiatry | year= 2006 | volume= 67 | issue= 1 | pages= 37-40 | pmid=16426086 | doi= | pmc= | url= }} </ref> | |||

* In health care, the impact factor is associated with the quality of the articles in a journal.<ref name="pmid22272156">{{cite journal| author=Lokker C, Haynes RB, Chu R, McKibbon KA, Wilczynski NL, Walter SD| title=How well are journal and clinical article characteristics associated with the journal impact factor? a retrospective cohort study. | journal=J Med Libr Assoc | year= 2012 | volume= 100 | issue= 1 | pages= 28-33 | pmid=22272156 | doi=10.3163/1536-5050.100.1.006 | pmc=PMC3257484 | url=http://www.ncbi.nlm.nih.gov/entrez/eutils/elink.fcgi?dbfrom=pubmed&tool=sumsearch.org/cite&retmode=ref&cmd=prlinks&id=22272156 }} </ref><ref name="pmid22272156">{{cite journal| author=Lokker C, Haynes RB, Chu R, McKibbon KA, Wilczynski NL, Walter SD| title=How well are journal and clinical article characteristics associated with the journal impact factor? a retrospective cohort study. | journal=J Med Libr Assoc | year= 2012 | volume= 100 | issue= 1 | pages= 28-33 | pmid=22272156 | doi=10.3163/1536-5050.100.1.006 | pmc=PMC3257484 | url=http://www.ncbi.nlm.nih.gov/entrez/eutils/elink.fcgi?dbfrom=pubmed&tool=sumsearch.org/cite&retmode=ref&cmd=prlinks&id=22272156 }} </ref><ref name="pmid12038918">{{cite journal| author=Lee KP, Schotland M, Bacchetti P, Bero LA| title=Association of journal quality indicators with methodological quality of clinical research articles. | journal=JAMA | year= 2002 | volume= 287 | issue= 21 | pages= 2805-8 | pmid=12038918 | doi= | pmc= | url=http://www.ncbi.nlm.nih.gov/entrez/eutils/elink.fcgi?dbfrom=pubmed&tool=sumsearch.org/cite&retmode=ref&cmd=prlinks&id=12038918 }} </ref> | |||

The most commonly mentioned faults of the impact factor include: | The most commonly mentioned faults of the impact factor include:<ref name="pmid9056804">{{cite journal |author=Seglen PO |title=Why the impact factor of journals should not be used for evaluating research |journal=BMJ |volume=314 |issue=7079 |pages=498–502 |year=1997 |month=February |pmid=9056804 |doi= |url=http://bmj.bmjjournals.com/cgi/content/full/314/7079/497 |issn=}}</ref><ref name="pmid10196574">{{cite journal |author= |title=Citation data: the wrong impact? |journal=Nat. Neurosci. |volume=1 |issue=8 |pages=641–2 |year=1998 |month=December |pmid=10196574 |doi=10.1038/3639 |url=http://dx.doi.org/10.1038/3639 |issn=}}</ref> | ||

* ISI's inadequate international coverage. Although Web of Knowledge indexes journals from 60 countries, the coverage is very uneven. Very few publications from other languages than English are included, and very few journals from the less-developed countries. Even the ones that are included are undercounted, because most of the citations to such journals will come from other journals in the same language or from the same country, most of which are not included. | * ISI's inadequate international coverage. Although Web of Knowledge indexes journals from 60 countries, the coverage is very uneven. Very few publications from other languages than English are included, and very few journals from the less-developed countries. Even the ones that are included are undercounted, because most of the citations to such journals will come from other journals in the same language or from the same country, most of which are not included. | ||

* The number of citations to papers in a particular journal does not really directly measure the true quality of a journal, much less the scientific merit of the papers within it. It also reflects, at least in part, the intensity of publication or citation in that area, and the current popularity of that particular topic, along with the availability of particular journals. Journals with low circulation, regardless of the scientific merit of their contents, will never obtain high impact factors in an absolute sense, but if all the journals in a specific subject are of low circulation, as in some areas of [[botany ]]and [[zoology]], the relative standing is meaningful. Since defining the quality of an academic publication is problematic, involving non-quantifiable factors, such as the influence on the next generation of scientists, assigning this value a specific numeric measure is cannot tell the whole story. | * The number of citations to papers in a particular journal does not really directly measure the true quality of a journal, much less the scientific merit of the papers within it. It also reflects, at least in part, the intensity of publication or citation in that area, and the current popularity of that particular topic, along with the availability of particular journals. Journals with low circulation, regardless of the scientific merit of their contents, will never obtain high impact factors in an absolute sense, but if all the journals in a specific subject are of low circulation, as in some areas of [[botany ]]and [[zoology]], the relative standing is meaningful. Since defining the quality of an academic publication is problematic, involving non-quantifiable factors, such as the influence on the next generation of scientists, assigning this value a specific numeric measure is cannot tell the whole story. | ||

| Line 54: | Line 63: | ||

* By merely counting the frequency of citations per article and disregarding the prestige of the citing journals, the impact factor becomes merely a metric of popularity, not of prestige. | * By merely counting the frequency of citations per article and disregarding the prestige of the citing journals, the impact factor becomes merely a metric of popularity, not of prestige. | ||

===Misuse of | ===Misuse of impact factor=== | ||

* Citation counting may distort beliefs, "unfounded authority was established by citation bias against papers that refuted or weakened the belief; amplification, the marked expansion of the belief system by papers presenting no data addressing it; and forms of invention such as the conversion of hypothesis into fact through citation alone."<ref name="pmid19622839">{{cite journal |author=Greenberg SA |title=How citation distortions create unfounded authority: analysis of a citation network |journal=BMJ |volume=339 |issue= |pages=b2680 |year=2009 |pmid=19622839 |doi= |url= |issn=}}</ref> | |||

* The absolute value of an impact factor is meaningless. A journal with an IF of 2 would not be very impressive in [[Microbiology]], while it would in [[Oceanography]]. Such values are nonetheless sometimes advertised by scientific publishers. | * The absolute value of an impact factor is meaningless. A journal with an IF of 2 would not be very impressive in [[Microbiology]], while it would in [[Oceanography]]. Such values are nonetheless sometimes advertised by scientific publishers. | ||

* The comparison of Impact factors between different fields is invalid. Yet such comparisons have been widely used for the evaluation of not merely journals, but of scientists and of university departments. It is not possible to say, for example, that a department whose publications have an average IF below 2 is low-level. This would not make sense for [[Mechanical Engineering]], where only two review journals attain such a value. | * The comparison of Impact factors between different fields is invalid. Yet such comparisons have been widely used for the evaluation of not merely journals, but of scientists and of university departments. It is not possible to say, for example, that a department whose publications have an average IF below 2 is low-level. This would not make sense for [[Mechanical Engineering]], where only two review journals attain such a value. | ||

| Line 65: | Line 75: | ||

=== Alternatives === | === Alternatives === | ||

For discussion of alternatives to the impact factor, see [[academic journal]]. | |||

== Manipulation of impact factors == | == Manipulation of impact factors == | ||

A journal can adopt editorial policies that increase its impact factor. <ref>[http://chronicle.com/free/v52/i08/08a01201.htm The Number That's Devouring Science], ''[[The Chronicle of Higher Education]]'', Richard Monastersky, October 14, 2005</ref> These editorial policies may not solely involve improving the quality of published scientific work. Journals sometimes may publish a larger percentage of review articles. While many research articles remain uncited after 3 years, nearly all review articles receive at least one citation within three years of publication, therefore review articles can raise the impact factor of the journal. [http://thomsonscientific.com/knowtrend/essays/journalcitationreports/impactfactor/ The Thomson website] gives directions for removing these journals from the calculation. For researchers or students having even a slight familiarity with the field, the review journals will be obvious. | A journal can adopt editorial policies that increase its impact factor. <ref>[http://chronicle.com/free/v52/i08/08a01201.htm The Number That's Devouring Science], ''[[The Chronicle of Higher Education]]'', Richard Monastersky, October 14, 2005</ref> These editorial policies may not solely involve improving the quality of published scientific work. Journals sometimes may publish a larger percentage of review articles. While many research articles remain uncited after 3 years, nearly all review articles receive at least one citation within three years of publication, therefore review articles can raise the impact factor of the journal. [http://thomsonscientific.com/knowtrend/essays/journalcitationreports/impactfactor/ The Thomson website] gives directions for removing these journals from the calculation. For researchers or students having even a slight familiarity with the field, the review journals will be obvious. | ||

Publication by journals of industry-supported randomised trials may increase the impact factor.<ref name="pmid21048986">{{cite journal| author=Lundh A, Barbateskovic M, Hróbjartsson A, Gøtzsche PC| title=Conflicts of interest at medical journals: the influence of industry-supported randomised trials on journal impact factors and revenue - cohort study. | journal=PLoS Med | year= 2010 | volume= 7 | issue= 10 | pages= e1000354 | pmid=21048986 | doi=10.1371/journal.pmed.1000354 | pmc=PMC2964336 | url= }} </ref> | |||

Editorials in a journal do not count as publications. However when they cite published articles, often articles from the same journal, those citations increase the citation count for the article. This effect is hard to evaluate, for the distinction between editorial comment and short original articles is not obvious. "Letters to the editor"" might refer to either class. | Editorials in a journal do not count as publications. However when they cite published articles, often articles from the same journal, those citations increase the citation count for the article. This effect is hard to evaluate, for the distinction between editorial comment and short original articles is not obvious. "Letters to the editor"" might refer to either class. | ||

| Line 102: | Line 100: | ||

</blockquote> | </blockquote> | ||

This emphasizes the fact that the impact factor refers to the average number of citations per paper, and | This emphasizes the fact that the impact factor refers to the average number of citations per paper, and the [[probability distribution]] of that number is not [[Normal distribution|Gaussian]]. Most papers published in a high impact factor journal will ultimately be cited many fewer times than the impact factor may seem to suggest, and some will not be cited at all. Therefore the Impact Factor of the source journal should not be used as a substitute measure of the citation impact of individual articles in the journal. | ||
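The gap between a journal-level average and a typical paper can be illustrated with a small sketch. The citation counts below are invented for illustration and do not describe any real journal; the point is only that a single heavily cited paper can pull the mean far above what most papers receive.

```python
from statistics import mean, median

# Hypothetical citation counts for ten papers in one journal (made-up data).
citations = [0, 0, 1, 1, 2, 2, 3, 4, 7, 80]

journal_mean = mean(citations)    # what an impact-factor-style average reports
typical_paper = median(citations) # what a "middle" paper actually receives

# Count how many papers fall below the journal-level average.
below_mean = sum(c < journal_mean for c in citations)

print(f"mean citations per paper:   {journal_mean}")
print(f"median citations per paper: {typical_paper}")
print(f"papers below the mean:      {below_mean} of {len(citations)}")
```

In this made-up sample, nine of the ten papers are cited less often than the average suggests, which is the pattern the paragraph above describes.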

== Use in scientific employment == | == Use in scientific employment == | ||

Though the impact factor was originally intended as an objective measure of the reputability of a journal (Garfield), it is now being increasingly applied to measure the productivity of scientists. The way it is customarily used is to examine the impact factors of the journals in which the scientist's articles have been published. This has obvious appeal for an academic administrator who knows neither the subject nor the journals. A novel factor called the H-factor, invented by Hirsch, seems more | Though the impact factor was originally intended as an objective measure of the reputability of a journal (Garfield), it is now being increasingly applied to measure the productivity of scientists. The way it is customarily used is to examine the impact factors of the journals in which the scientist's articles have been published. This has obvious appeal for an academic administrator who knows neither the subject nor the journals. A novel factor called the H-factor, invented by Hirsch, seems more appropriate to describe the impact of individual scientists. If a scientist has published ''n'' articles which all have been cited at least ''n'' times, then he will have an H-factor of ''n''. The advantage is that it rewards publication of many good articles but not many lousy articles, and it is difficult to increase the H-factor by self citations. It also describes your actual impact and not the journals you are publishing in. One or few lucky "punches" will not alone give a high H-factor. The disadvantage is that the H-factor first becomes reliable when you have a substantial production, similar to assistant/associate professor level. It is also important to emphasize that a single number would never be perfect to describe a scientist, just as IQ does not tell everything about intelligence, but the H-factor is one of the best tries. | ||
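The H-factor definition above can be sketched in a few lines. This is a minimal illustration that takes the H-factor to be the largest ''n'' for which the definition holds; the function name `h_factor` and the sample citation counts are hypothetical and chosen only for the example.

```python
def h_factor(citations):
    """Return the largest n such that n articles each have at least n citations."""
    # Rank articles from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank   # the top `rank` articles all have >= rank citations
        else:
            break      # counts only decrease from here, so stop
    return h

# Hypothetical citation counts per article (made-up data).
print(h_factor([10, 8, 5, 4, 3]))  # four articles with at least 4 citations each
print(h_factor([100]))             # one lucky "punch" alone gives only 1
print(h_factor([]))                # no publications
```

The second call shows why a single highly cited article does not by itself produce a high value: the result is capped by the number of articles.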

==References== | ==References== | ||

<references/> | <references/>[[Category:Suggestion Bot Tag]] | ||

[[Category: | |||

Latest revision as of 16:00, 6 September 2024

The journal impact factor, very often abbreviated IF (dropping the journal in its name), is a measure of the citations to science and social science journals.[1][2] It is frequently used as a proxy for the importance of a journal to its field.

Overview

The Impact factor was devised by Eugene Garfield, the founder of the Institute for Scientific Information, now part of Thomson, a large worldwide US-based publisher. Impact factors are calculated each year by the Institute for Scientific Information for those journals which it indexes, and the factors and indices are published in Journal Citation Reports. Some related values, also calculated and published by the same organization, are:

- the immediacy index: the average number of citations an article receives in the year in which it is published

- the journal cited half-life: the median age of the journal's articles that were cited in a given Journal Citation Reports year. For example, if a journal's half-life in 2005 is 5, then articles published in 2001-2005 account for 50% of all the citations to that journal in 2005.

- the aggregate impact factor for a subject category: it is calculated taking into account the number of citations to all journals in the subject category and the number of articles from all the journals in the subject category.

These measures apply only to journals, not individual articles. The relative number of citations an individual article receives is better viewed as citation impact.

It is however possible to measure the Impact factor of the journals in which a particular person has published articles. This use is widespread, but controversial. Eugene Garfield warns about the "misuse in evaluating individuals" because there is "a wide variation from article to article within a single journal".[3]

Impact factors have a huge, but controversial, influence on the way published scientific research is perceived and evaluated.

Calculation

The impact factor for a journal is calculated based on a three-year period, and can be considered to be the average number of times published papers are cited up to two years after publication. For example, the 2003 impact factor for a journal would be calculated as follows:

- A = the number of times articles published in 2001-2 were cited in indexed journals during 2003

- B = the number of articles, reviews, proceedings or notes published in 2001-2

- 2003 impact factor = A/B

- (note that the 2003 impact factor was actually published in 2004, because it could not be calculated until all of the 2003 publications had been received.)

A convenient way of thinking about it is that a journal whose articles are cited once each, on average, has an IF of 1 in the equation above. In the simplest case of a journal with a single article that is cited once, there is nothing to average.
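The A/B calculation described above can be sketched as follows. The function name `impact_factor` and the counts are hypothetical and used only for illustration; they are not taken from Journal Citation Reports.

```python
def impact_factor(citations_in_year: int, citable_items: int) -> float:
    """Compute A / B for one journal.

    citations_in_year: A, citations received in the target year by items
                       published in the two preceding years.
    citable_items:     B, articles, reviews, proceedings or notes published
                       in those two preceding years.
    """
    if citable_items <= 0:
        raise ValueError("B must be positive; no citable items were published")
    return citations_in_year / citable_items

# Hypothetical journal, 2003 impact factor (made-up counts).
a = 450  # citations during 2003 to items published in 2001-2
b = 300  # citable items published in 2001-2
print(impact_factor(a, b))  # 1.5
```

A journal cited once, on average, per published item gives `impact_factor(n, n) == 1.0` for any positive `n`.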

There are some nuances to this: ISI excludes certain article types (such as news items, correspondence, and errata) from the denominator.[4] New journals that are indexed from their first published issue receive an Impact Factor once two years of indexing are complete; in this case, the citations to the year prior to Volume 1 and the number of articles published in that year are known zero values. Journals that are indexed starting with a volume other than the first will not have an Impact Factor published until three complete data-years are known. Annuals and other irregular publications sometimes publish no items in a particular year, which affects the count. The impact factor covers a specific time period: that period suits some fields of science, such as molecular biology, but not subjects with a slower publication pattern, such as ecology. It is possible to calculate the impact factor for any desired period, and the web site gives instructions. Journal Citation Reports includes a table of the relative rank of journals by Impact factor in each specific science discipline, such as organic chemistry or psychiatry.

The JIF can be calculated for journals not included in the JIF report.[5]

Debate

It is sometimes useful to be able to compare different journals and research groups. For example, a sponsor of scientific research might wish to compare the results to assess the productivity of its projects. An objective measure of the importance of different publications is then required, and the impact factor and the number of publications are the only ones publicly available. However, it is important to remember that different scholarly disciplines can have very different publication and citation practices, which affect not only the number of citations but also how quickly after publication most articles in the subject reach their highest level of citation. In all cases, it is only relevant to consider the rank of the journal in a category of its peers, rather than the raw Impact Factor value.

Impact factors are not infallible measures of journal quality.[6][7] For example, it is unclear whether the number of citations a paper garners measures its actual quality or simply reflects the sheer number of publications in that particular area of research, and whether there is a difference between the two. Furthermore, in a journal with a long lag time between submission and publication, it might be impossible to cite articles within the three-year window. Indeed, for some journals, the time between submission and publication can be over two years, which leaves less than a year for citation. On the other hand, a longer temporal window would be slow to adjust to changes in journal impact factors. Thus, while the impact factor is appropriate for some fields of science, such as molecular biology, it is not appropriate for subjects with a slower publication pattern, such as ecology. (It is possible to calculate the impact factor for any desired period, and the web site gives instructions.)

Favorable properties of the impact factor include:

- ISI's wide international coverage. Web of Knowledge indexes 9000 science and social science journals from 60 countries. This is perhaps only partially correct: see below.

- Results are widely (though not freely) available to use and understand.

- They are an objective measure, and have a wider acceptance than any of the alternatives.

- In practice, the alternative measure of quality is "prestige." This is rating by reputation, which is very slow to change, and cannot be quantified or objectively used. It merely demonstrates popularity. The impact factor is more objective, yet correlates with prestige.[8]

- Impact factor is "commensurate with most proposed hierarchies of evidence".[9]

- Impact factor is associated with the conduct[10] and quality of reporting[11] of randomized controlled trials. Some studies have not found an association.[12]

- In health care, the impact factor is associated with the quality of the articles in a journal.[13][14]

The most commonly mentioned faults of the impact factor include:[6][7]

- ISI's inadequate international coverage. Although Web of Knowledge indexes journals from 60 countries, the coverage is very uneven. Very few publications from other languages than English are included, and very few journals from the less-developed countries. Even the ones that are included are undercounted, because most of the citations to such journals will come from other journals in the same language or from the same country, most of which are not included.

- The number of citations to papers in a particular journal does not directly measure the true quality of a journal, much less the scientific merit of the papers within it. It also reflects, at least in part, the intensity of publication or citation in that area and the current popularity of that particular topic, along with the availability of particular journals. Journals with low circulation, regardless of the scientific merit of their contents, will never obtain high impact factors in an absolute sense, but if all the journals in a specific subject are of low circulation, as in some areas of botany and zoology, the relative standing is meaningful. Since defining the quality of an academic publication is problematic and involves non-quantifiable factors, such as the influence on the next generation of scientists, a single numeric measure cannot tell the whole story.

- The temporal window for citation is too short, as discussed above. Classic articles are cited frequently even after several decades, but this should not affect specific journals.

- The absolute number of researchers, the average number of authors on each paper, and the nature of results in different research areas, as well as variations in citation habits between different disciplines, particularly the number of citations in each paper, all combine to make impact factors incommensurable between different groups of scientists. Generally, for example, medical journals have higher impact factors than mathematical and engineering journals. This limitation is accepted by the publishers; it has never been claimed that impact factors are useful for comparisons between fields, and such a use indicates a misunderstanding.

- By merely counting the frequency of citations per article and disregarding the prestige of the citing journals, the impact factor becomes merely a metric of popularity, not of prestige.

Misuse of impact factor

- Citation counting may distort beliefs: "unfounded authority was established by citation bias against papers that refuted or weakened the belief; amplification, the marked expansion of the belief system by papers presenting no data addressing it; and forms of invention such as the conversion of hypothesis into fact through citation alone."[15]

- The absolute value of an impact factor is meaningless. A journal with an IF of 2 would not be very impressive in Microbiology, while it would in Oceanography. Such values are nonetheless sometimes advertised by scientific publishers.

- The comparison of Impact factors between different fields is invalid. Yet such comparisons have been widely used for the evaluation of not merely journals, but of scientists and of university departments. It is not possible to say, for example, that a department whose publications have an average IF below 2 is low-level. This would not make sense for Mechanical Engineering, where only two review journals attain such a value.

- Outside the sciences, impact factors are relevant for fields that have a similar publication pattern to the sciences (such as economics), where research publications are almost always journal articles that cite other journal articles. They are not relevant for literature, where the most important publications are books citing other books. Therefore, ISI does not publish a JCR for the humanities.

- Even in the sciences, the impact factor is not fully relevant to fields, such as some in engineering, where the principal scientific output is conference proceedings, technical reports, and patents.

- Since only the ISI database journals are used, it undercounts the number of citations from journals in less-developed countries, and less-universal languages.

- It cannot correctly be the only thing to be considered by libraries in selecting journals. The local usefulness of the journal is at least equally important.

Alternatives

For discussion of alternatives to the impact factor, see academic journal.

Manipulation of impact factors

A journal can adopt editorial policies that increase its impact factor.[16] These policies need not involve improving the quality of the published scientific work. For example, a journal may publish a larger percentage of review articles. While many research articles remain uncited after three years, nearly all review articles receive at least one citation within three years of publication, so review articles can raise the impact factor of the journal. The Thomson website gives directions for removing these journals from the calculation. For researchers or students having even a slight familiarity with the field, the review journals will be obvious.

Publication by journals of industry-supported randomised trials may increase the impact factor.[17]

Editorials in a journal do not count as publications. However, when they cite published articles, often articles from the same journal, those citations increase the citation count for the article. This effect is hard to evaluate, for the distinction between editorial comment and short original articles is not obvious. "Letters to the editor" might refer to either class.

An editor of a journal may encourage authors to cite articles from that journal in the papers they submit. The degree to which this practice affects the citation count and impact factor included in the Journal Citation Reports cited journal data must therefore be examined. Most of these factors are thoroughly discussed on the site's help pages, along with ways of correcting the figures for these effects if desired. However, it is normal for articles in a journal to cite primarily its own articles, for those are the ones of the same merit in the same special field. If done artificially, the effect will only be significant for the journals with the lowest citations, and will affect the placement only at the bottom of the list.
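One possible correction for journal self-citation can be sketched as below. This is only an illustration under stated assumptions, not necessarily the procedure described on the site's help pages: the function name, the `"self"` key, and all counts are hypothetical.

```python
def impact_factor_without_self_citations(citations, citable_items):
    """Return (raw, adjusted) impact factors.

    `citations` maps citing-journal name -> citations made in the census
    year to the target journal's items from the two preceding years.
    The key "self" (a naming choice of this sketch) holds self-citations.
    """
    total = sum(citations.values())
    external = total - citations.get("self", 0)
    return total / citable_items, external / citable_items

# Hypothetical counts for a journal with 50 citable items.
counts = {"self": 30, "Journal X": 50, "Journal Y": 20}
raw, adjusted = impact_factor_without_self_citations(counts, 50)
print(raw)       # 100 citations / 50 items
print(adjusted)  # 70 external citations / 50 items
```

Comparing the two figures gives a quick sense of how much of a journal's impact factor depends on its own citing behaviour.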

Skewness

An editorial in Nature (Vol 435, pp 1003-1004, 23 June 2005) stated:[18]

For example, we have analysed the citations of individual papers in Nature and found that 89% of last year’s figure was generated by just 25% of our papers. The most cited Nature paper from 2002−03 was the mouse genome, published in December 2002. That paper represents the culmination of a great enterprise, but is inevitably an important point of reference rather than an expression of unusually deep mechanistic insight. So far it has received more than 1,000 citations. Within the measurement year of 2004 alone, it received 522 citations. Our next most cited paper from 2002−03 (concerning the functional organization of the yeast proteome) received 351 citations that year. Only 50 out of the roughly 1,800 citable items published in those two years received more than 100 citations in 2004. The great majority of our papers received fewer than 20 citations.

This emphasizes the fact that the impact factor refers to the average number of citations per paper, and the probability distribution of that number is not Gaussian. Most papers published in a high impact factor journal will ultimately be cited many fewer times than the impact factor may seem to suggest, and some will not be cited at all. Therefore the Impact Factor of the source journal should not be used as a substitute measure of the citation impact of individual articles in the journal.
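The gap between an average and a typical paper can be seen in a small sketch. The citation counts below are hypothetical and chosen only to mimic a skewed distribution; they are not the Nature figures quoted above.

```python
from statistics import median

# Hypothetical citation counts for ten papers in one journal.
citations = [500, 120, 30, 12, 8, 5, 3, 2, 0, 0]

mean_citations = sum(citations) / len(citations)  # what an average reports
median_citations = median(citations)              # the "typical" paper
below_mean = sum(1 for c in citations if c < mean_citations)

print(mean_citations)    # 68.0
print(median_citations)  # 6.5
print(below_mean)        # 8 of the 10 papers fall below the mean
```

Here two heavily cited papers pull the mean far above what most papers in the set receive, which is the pattern the editorial describes.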

Use in scientific employment

Though the impact factor was originally intended as an objective measure of the reputability of a journal (Garfield), it is now being increasingly applied to measure the productivity of scientists. The customary approach is to examine the impact factors of the journals in which the scientist's articles have been published. This has obvious appeal for an academic administrator who knows neither the subject nor the journals. A newer measure called the H-factor, invented by Hirsch, seems more appropriate for describing the impact of individual scientists. If a scientist has published n articles that have each been cited at least n times, and n is the largest number for which this holds, then that scientist has an H-factor of n. The advantage is that it rewards the publication of many good articles but not of many poor ones, and it is difficult to increase the H-factor through self-citation. It also describes the scientist's actual impact rather than that of the journals in which the work appears. One or a few lucky "punches" will not by themselves give a high H-factor. The disadvantage is that the H-factor only becomes reliable once a scientist has a substantial body of work, roughly at the assistant or associate professor level. It is also important to emphasize that a single number can never perfectly describe a scientist, just as IQ does not tell everything about intelligence, but the H-factor is one of the best attempts.
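The definition of the H-factor given above can be expressed as a short sketch. The function name and the sample citation counts are illustrative only.

```python
def h_factor(citation_counts):
    """Largest n such that n papers have at least n citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for position, count in enumerate(ranked, start=1):
        if count >= position:
            h = position  # the top `position` papers all have >= position cites
        else:
            break
    return h

print(h_factor([10, 8, 5, 4, 3]))  # 4
print(h_factor([1000, 2, 1]))      # 2
print(h_factor([]))                # 0
```

The second example shows why a single heavily cited paper does not by itself produce a high value: the result is limited by how many papers reach the threshold, not by the size of the largest count.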

References

- Garfield E (2006). "The history and meaning of the journal impact factor". JAMA 295 (1): 90-3. DOI:10.1001/jama.295.1.90. PMID 16391221.

- Dong P, Loh M, Mondry A (December 2005). "The "impact factor" revisited". Biomed Digit Libr 2: 7. DOI:10.1186/1742-5581-2-7. PMID 16324222.

- Eugen Garfield (June 1998). "Der Impact Faktor und seine richtige Anwendung". Der Unfallchirurg 101 (6): 413-414.

- McVeigh ME, Mann SJ (2009). "The journal impact factor denominator: defining citable (counted) items". JAMA 302 (10): 1107-9. DOI:10.1001/jama.2009.1301. PMID 19738096.

- Stegmann J (1997). "How to evaluate journal impact factors". Nature 390 (6660): 550. DOI:10.1038/37463. PMID 9403675.

- Seglen PO (February 1997). "Why the impact factor of journals should not be used for evaluating research". BMJ 314 (7079): 498-502. PMID 9056804.

- "Citation data: the wrong impact?" (December 1998). Nat. Neurosci. 1 (8): 641-2. DOI:10.1038/3639. PMID 10196574.

- Saha S, Saint S, Christakis DA (January 2003). "Impact factor: a valid measure of journal quality?". J Med Libr Assoc 91 (1): 42-6. PMID 12572533.

- Patsopoulos NA, Analatos AA, Ioannidis JP (2005). "Relative citation impact of various study designs in the health sciences". JAMA 293 (19): 2362-6. DOI:10.1001/jama.293.19.2362. PMID 15900006.

- Gluud LL, Sørensen TI, Gøtzsche PC, Gluud C (2005). "The journal impact factor as a predictor of trial quality and outcomes: cohort study of hepatobiliary randomized clinical trials". Am J Gastroenterol 100 (11): 2431-5. DOI:10.1111/j.1572-0241.2005.00327.x. PMID 16279896.

- Ethgen M, Boutron L, Steg PG, Roy C, Ravaud P (2009). "Quality of reporting internal and external validity data from randomized controlled trials evaluating stents for percutaneous coronary intervention". BMC Med Res Methodol 9: 24. DOI:10.1186/1471-2288-9-24. PMID 19358717. PMC 2679061.

- Barbui C, Cipriani A, Malvini L, Tansella M (2006). "Validity of the impact factor of journals as a measure of randomized controlled trial quality". J Clin Psychiatry 67 (1): 37-40. PMID 16426086.

- Lokker C, Haynes RB, Chu R, McKibbon KA, Wilczynski NL, Walter SD (2012). "How well are journal and clinical article characteristics associated with the journal impact factor? a retrospective cohort study". J Med Libr Assoc 100 (1): 28-33. DOI:10.3163/1536-5050.100.1.006. PMID 22272156. PMC 3257484.

- Lee KP, Schotland M, Bacchetti P, Bero LA (2002). "Association of journal quality indicators with methodological quality of clinical research articles". JAMA 287 (21): 2805-8. PMID 12038918.

- Greenberg SA (2009). "How citation distortions create unfounded authority: analysis of a citation network". BMJ 339: b2680. PMID 19622839.

- Monastersky R (October 14, 2005). "The Number That's Devouring Science". The Chronicle of Higher Education.

- Lundh A, Barbateskovic M, Hróbjartsson A, Gøtzsche PC (2010). "Conflicts of interest at medical journals: the influence of industry-supported randomised trials on journal impact factors and revenue - cohort study". PLoS Med 7 (10): e1000354. DOI:10.1371/journal.pmed.1000354. PMID 21048986. PMC 2964336.

- "Not-so-deep impact" (23 June 2005). Nature 435: 1003-1004.